The popular AI image generator Midjourney bans a wide range of words about the human reproductive system from being used as prompts, MIT Technology Review has discovered.

If someone types “placenta,” “fallopian tubes,” “mammary glands,” “sperm,” “uterine,” “urethra,” “cervix,” “hymen,” or “vulva” into Midjourney, the system flags the word as a banned prompt and doesn’t let it be used. Sometimes, users who tried one of these prompts are blocked for a limited time for trying to generate banned content. Other words relating to human biology, such as “liver” and “kidney,” are allowed.

Midjourney’s founder, David Holz, says it’s banning these words as a stopgap measure to prevent people from generating shocking or gory content while the company “improves things on the AI side.” Holz says moderators watch how words are being used and what kinds of images are being generated, and adjust the bans periodically. The firm has a community guidelines page that lists the kind of content it blocks in this way, including sexual imagery, gore, and even the 🍑 emoji, which is often used as a symbol for the buttocks.

AI models such as Midjourney, DALL-E 2, and Stable Diffusion are trained on billions of images that have been scraped from the internet. Research by a team at the University of Washington has found that such models learn biases that sexually objectify women, which are then reflected in the images they produce. The massive size of the data set makes it almost impossible to remove unwanted images, such as those of a sexual or violent nature, or those that could produce biased outcomes. The more often something appears in the data set, the stronger the connection the AI model makes, which means it is more likely to appear in images the model generates.

Midjourney’s word bans are a piecemeal attempt to address this problem. Some terms relating to the male reproductive system, such as “sperm” and “testicles,” are blocked too, but the list of banned words seems to skew predominantly female.

The prompt ban was first spotted by Julia Rockwell, a clinical data analyst at Datafy Clinical, and her friend Madeline Keenen, a cell biologist at the University of North Carolina at Chapel Hill. Rockwell used Midjourney to try to generate a fun image of the placenta for Keenen, who studies the organ. To her surprise, Rockwell found that using “placenta” as a prompt was banned. She then started experimenting with other words related to the human reproductive system, and found the same.

However, the pair also showed how it’s possible to work around these bans to create sexualized images by using different spellings of words, or other euphemisms for sexual or gory content.

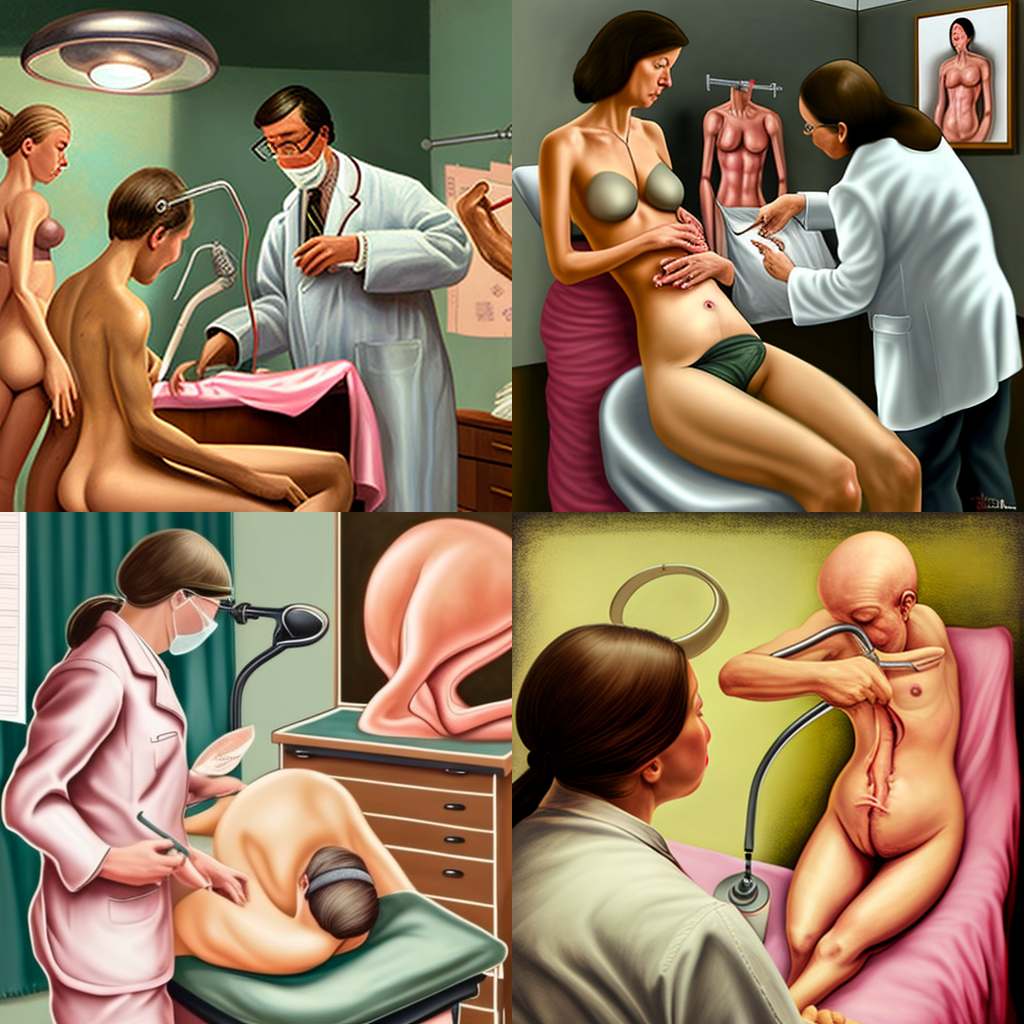

In findings they shared with MIT Technology Review, they found that the prompt “gynaecological exam” (using the British spelling) generated some deeply creepy images: one of two naked women in a doctor’s office, and another of a bald three-limbed person cutting up their own stomach.

An image generated in Midjourney using the prompt "gynaecology exam." Credit: Julia Rockwell

Midjourney’s crude banning of prompts relating to reproductive biology highlights how tricky it is to moderate content around generative AI systems. It also demonstrates how the tendency for AI systems to sexualize women extends all the way to their internal organs, says Rockwell.

It doesn’t have to be like this. OpenAI and Stability.AI have managed to filter out unwanted outputs and prompts, so when you type the same words into their image-making systems (DALL-E 2 and Stable Diffusion, respectively), they produce very different images. The prompt “gynecology exam” yielded images of a person holding an invented medical instrument for DALL-E 2, and two distorted masked women with rubber gloves and lab coats on Stable Diffusion. Both systems also allowed the prompt “placenta,” and produced biologically inaccurate images of fleshy organs in response.

A spokesperson for Stability.AI said their latest model has a filter that blocks unsafe and inappropriate content from users, and has a tool that detects nudity and other inappropriate images and returns a blurred image. The company uses a combination of keywords, image recognition, and other techniques to moderate the images its AI system generates. OpenAI did not respond to a request for comment.

But tools to filter out unwanted AI-generated images are still deeply imperfect. Because AI developers and researchers don’t know how to systematically audit and improve their models yet, they “hotfix” them with broad bans like the ones Midjourney has introduced, says Marzyeh Ghassemi, an assistant professor at MIT who studies applying machine learning to health.

It’s unclear why references to gynecological exams or the placenta, an organ that develops during pregnancy and provides oxygen and nutrients to a baby, would generate gory or sexually explicit content. But it likely has something to do with the associations the model has made between images in its data set, according to Irene Chen, a researcher at Microsoft Research, who studies machine learning for equitable health care.

“Much more work needs to be done to understand what harmful associations models might be learning, because if we work with human data, we are going to learn biases,” says Ghassemi.

There are many approaches tech companies could take to address this issue besides banning words altogether. For example, Ghassemi says, certain prompts, such as ones relating to human biology, could be allowed in particular contexts but banned in others.

“Placenta” could be allowed if the string of words in the prompt signaled that the user was trying to generate an image of the organ for educational or research purposes. But if the prompt was used in a context where someone tried to generate sexual content or gore, it could be banned.
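To make the idea of context-dependent filtering concrete, here is a minimal sketch in Python. It is not how Midjourney or any other company moderates prompts. The word lists, the function name `moderate_prompt`, and the three outcomes ("allow," "block," and "review") are all assumptions invented for this illustration.

```python
import re

# Hypothetical word lists, chosen only for illustration. None of them
# come from Midjourney or any other vendor.
SENSITIVE_TERMS = {"placenta", "cervix", "uterine"}
BENIGN_CONTEXT = {"diagram", "anatomy", "textbook", "educational", "research"}
HARMFUL_CONTEXT = {"gore", "gory", "bloody", "nude", "sexual"}


def tokenize(prompt: str) -> set[str]:
    """Lowercase the prompt and return its alphabetic words as a set."""
    return set(re.findall(r"[a-z]+", prompt.lower()))


def moderate_prompt(prompt: str) -> str:
    """Return "allow", "block", or "review" based on the words around a term."""
    words = tokenize(prompt)
    if words & HARMFUL_CONTEXT:
        # Harmful context words block the prompt outright.
        return "block"
    if not (words & SENSITIVE_TERMS):
        # No sensitive anatomy term is present, so there is nothing to check.
        return "allow"
    if words & BENIGN_CONTEXT:
        # A sensitive term next to educational or research wording is allowed.
        return "allow"
    # A sensitive term with no context either way is sent to a human reviewer.
    return "review"


if __name__ == "__main__":
    for example in ("textbook diagram of a placenta", "gory placenta", "placenta"):
        print(example, "->", moderate_prompt(example))
```

A plain word match like this would be just as easy to sidestep with alternate spellings as the bans Rockwell and Keenen tested, so a real system would probably need something sturdier, such as a trained classifier, to judge context.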

However crude, though, Midjourney’s censoring has been done with the right intentions.

“These guardrails are there to protect women and minorities from having disturbing content generated about them and used against them,” says Ghassemi.
