It was clear that OpenAI was on to something. In late 2021, a small team of researchers was playing around with an idea at the company’s San Francisco office. They’d built a new version of OpenAI’s text-to-image model, DALL-E, an AI that converts short written descriptions into pictures: a fox painted by Van Gogh, perhaps, or a corgi made of pizza. Now they just had to figure out what to do with it.

“Almost always, we build something and then we all have to use it for a while,” Sam Altman, OpenAI’s cofounder and CEO, tells MIT Technology Review. “We try to figure out what it’s going to be, what it’s going to be used for.”

Not this time. As they tinkered with the model, everyone involved realized this was something special. “It was very clear that this was it: this was the product,” says Altman. “There was no debate. We never even had a meeting about it.”

But nobody, not Altman and not the DALL-E team, could have predicted just how big a splash this product was going to make. “This is the first AI technology that has caught fire with regular people,” says Altman.

DALL-E 2 dropped in April 2022. In May, Google announced (but did not release) two text-to-image models of its own, Imagen and Parti. Then came Midjourney, a text-to-image model made for artists. And August brought Stable Diffusion, an open-source model that the UK-based startup Stability AI has released to the public for free.

The doors were off their hinges. OpenAI signed up a million users in just 2.5 months. More than a million people started using Stable Diffusion via its paid-for service Dream Studio in less than half that time; many more used Stable Diffusion through third-party apps or installed the free version on their own computers. (Emad Mostaque, Stability AI’s founder, says he’s aiming for a billion users.)

And then in October we had Round Two: a spate of text-to-video models from Google, Meta, and others. Instead of just generating still images, these can generate short video clips, animations, and 3D pictures.

The pace of development has been breathtaking. In just a few months, the technology has inspired hundreds of newspaper headlines and magazine covers, filled social media with memes, kicked a hype machine into overdrive, and set off an intense backlash.

This story is part of our upcoming 10 Breakthrough Technologies 2023 series. Sign up for The Download to get the full list in January.

“The shock and awe of this technology is amazing, and it’s fun; it’s what new technology should be,” says Mike Cook, an AI researcher at King’s College London who studies computational creativity. “But it’s moved so fast that your initial impressions are being updated before you even get used to the idea. I think we’re going to spend a while digesting it as a society.”

Artists are caught in the middle of one of the biggest upheavals in a generation. Some will lose work; some will find new opportunities. A few are headed to the courts to fight legal battles over what they view as the misappropriation of images to train models that could replace them.

Creators were caught off guard, says Don Allen Stevenson III, a digital artist based in California who has worked at visual-effects studios such as DreamWorks. “For technically trained folks like myself, it’s very scary. You’re like, ‘Oh my god, that’s my whole job,’” he says. “I went into an existential crisis for the first month of using DALL-E.”

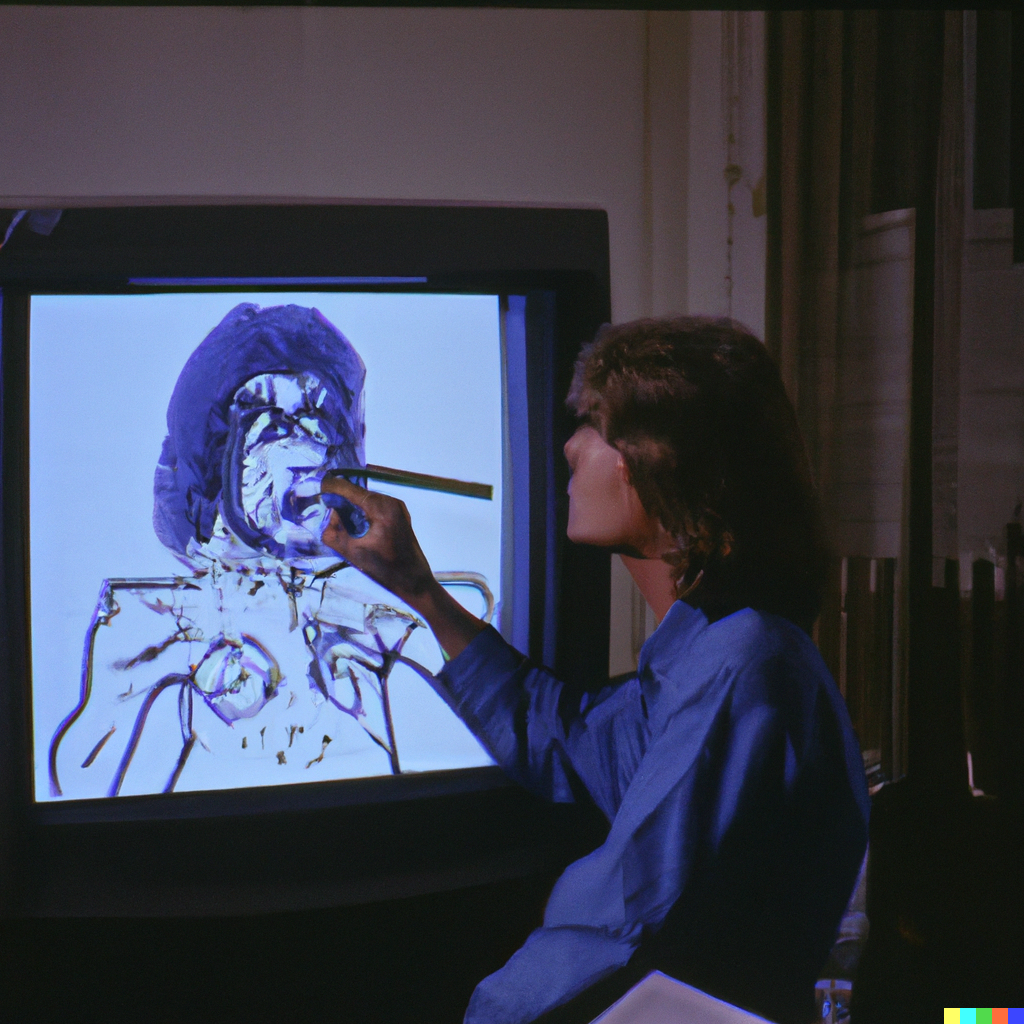

The image above is based on a variation of the prompt that created the final art. "After landing on an image I was happy with, I went in and made adjustments to clean up any AI artifacts and make it look more 'real.' I'm a big fan of sci-fi from that era," explains Erik Carter.
ERIK CARTER VIA DALL-E 2

But while some are still reeling from the shock, many, including Stevenson, are finding ways to work with these tools and anticipate what comes next.

The exciting truth is, we don’t really know. For while creative industries (from entertainment media to fashion, architecture, marketing, and more) will feel the impact first, this tech will give creative superpowers to everybody. In the longer term, it could be used to generate designs for almost anything, from new types of drugs to clothes and buildings. The generative revolution has begun.

A magical revolution

For Chad Nelson, a digital creator who has worked on video games and TV shows, text-to-image models are a once-in-a-lifetime breakthrough. “This tech takes you from that lightbulb in your head to a first sketch in seconds,” he says. “The speed at which you can create and explore is revolutionary, beyond anything I’ve experienced in 30 years.”

Within weeks of their debut, people were using these tools to prototype and brainstorm everything from magazine illustrations and marketing layouts to video-game environments and movie concepts. People generated fan art, even whole comic books, and shared them online in the thousands. Altman even used DALL-E to generate designs for sneakers that someone then made for him after he tweeted the image.

Amy Smith, a computer scientist at Queen Mary University of London and a tattoo artist, has been using DALL-E to design tattoos. “You can sit down with the client and generate designs together,” she says. “We’re in a revolution of media generation.”

Paul Trillo, a digital and video artist based in California, thinks the technology will make it easier and faster to brainstorm ideas for visual effects. “People are saying this is the death of effects artists, or the death of fashion designers,” he says. “I don’t think it’s the death of anything. I think it means we don’t have to work nights and weekends.”

Stock image companies are taking different positions. Getty has banned AI-generated images. Shutterstock has signed a deal with OpenAI to embed DALL-E in its website and says it will start a fund to reimburse artists whose work has been used to train the models.

Stevenson says he has tried out DALL-E at every step of the process that an animation studio uses to produce a film, including designing characters and environments. With DALL-E, he was able to do the work of multiple departments in a few minutes. “It’s uplifting for all the folks who’ve never been able to create because it was too expensive or too technical,” he says. “But it’s terrifying if you’re not open to change.”

Nelson thinks there’s still more to come. Eventually, he sees this tech being embraced not only by media giants but also by architecture and design firms. It’s not ready yet, though, he says.

“Right now it’s like you have a little magic box, a little wizard,” he says. That’s great if you just want to keep generating images, but not if you need a creative partner. “If I want it to create stories and build worlds, it needs far more awareness of what I’m creating,” he says.

That’s the problem: these models still have no idea what they’re doing.

Inside the black box

To see why, let’s look at how these programs work. From the outside, the software is a black box. You type in a short description, known as a prompt, and then wait a few seconds. What you get back is a handful of images that fit that prompt (more or less). You may have to tweak your text to coax the model to produce something closer to what you had in mind, or to hone a serendipitous result. This has become known as prompt engineering.

Prompts for the most detailed, stylized images can run to several hundred words, and wrangling the right words has become a valuable skill. Online marketplaces have sprung up where prompts known to produce desirable results are bought and sold.

Prompts can contain phrases that instruct the model to go for a particular style: “trending on ArtStation” tells the AI to mimic the (typically very detailed) style of images popular on ArtStation, a website where thousands of artists showcase their work; “Unreal engine” invokes the familiar graphic style of certain video games; and so on. Users can even enter the names of specific artists and have the AI produce pastiches of their work, which has made some artists very unhappy.
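Mechanically, this kind of prompt engineering is mostly string assembly. The sketch below is a hypothetical helper, not part of any real tool, that appends the two style phrases quoted above to a base description. Joining the pieces with commas is an assumption reflecting common user practice, not a rule the models enforce.

```python
# Hypothetical helper: the modifier strings are the ones quoted in the text,
# but the function and the dictionary keys are illustrative only.
STYLE_MODIFIERS = {
    "artstation": "trending on ArtStation",
    "game": "Unreal engine",
}

def build_prompt(subject: str, *styles: str) -> str:
    """Append the requested style phrases to a base description."""
    phrases = [STYLE_MODIFIERS[style] for style in styles]
    return ", ".join([subject, *phrases])

print(build_prompt("a corgi made of pizza", "artstation", "game"))
# a corgi made of pizza, trending on ArtStation, Unreal engine
```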

ERIK CARTER VIA DALL-E 2


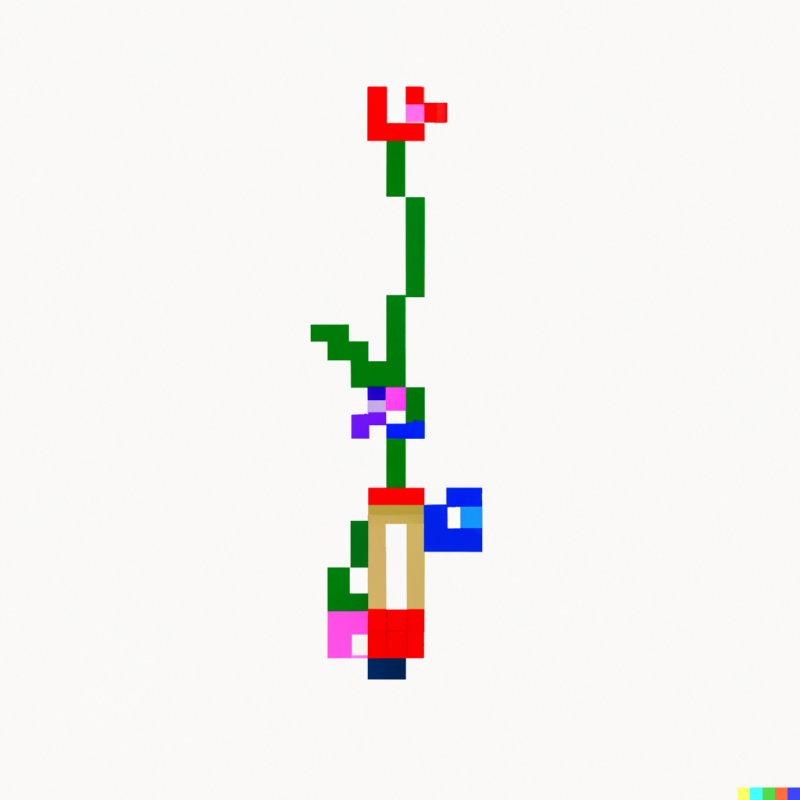

"I tried to metaphorically represent AI with the prompt 'the Big Bang' and ended up with these abstract bubble-like forms (right). It wasn't exactly what I wanted, so then I went more literal with 'explosion in outer space 1980s photograph' (left), which seemed too aggressive. I also tried growing some digital plants by putting in 'plant 8-bit pixel art' (center)."

Under the hood, text-to-image models have two key components: one neural network trained to pair an image with text that describes that image, and another trained to generate images from scratch. The basic idea is to get the second neural network to generate an image that the first neural network accepts as a match for the prompt.
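One common way to build the first network is to map both text and images into the same space of numeric vectors, or embeddings, and score a pair by how closely the two vectors point in the same direction. A minimal sketch, assuming a CLIP-style cosine-similarity score and hand-made three-dimensional embeddings (real encoders learn hundreds of dimensions from data); the file names and vectors are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings standing in for a text encoder's and an image encoder's output.
text_embedding = [0.9, 0.1, 0.0]  # "a corgi made of pizza"
candidate_images = {
    "pizza_corgi.png": [0.8, 0.2, 0.1],
    "fox_van_gogh.png": [0.0, 0.3, 0.9],
}

# The "matching" network's job: pick the image whose embedding best fits the prompt.
best = max(candidate_images,
           key=lambda name: cosine_similarity(text_embedding, candidate_images[name]))
print(best)  # pizza_corgi.png
```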

The big breakthrough behind the new models is in the way images get generated. The first version of DALL-E used an extension of the technology behind OpenAI’s language model GPT-3, producing images by predicting the next pixel in an image as if they were words in a sentence. This worked, but not well. “It was not a magical experience,” says Altman. “It’s amazing that it worked at all.”
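This next-pixel approach can be sketched as a loop that repeatedly asks a model for a probability distribution over the next pixel and appends the most likely one. It is a toy under stated assumptions: `toy_predictor` is a hypothetical stand-in for a trained network, the image is a short list of palette indices, and the choice is greedy rather than sampled. (The original DALL-E actually predicted compressed image tokens rather than raw pixels.)

```python
from typing import Callable, List

Pixel = int  # a palette index in this toy

def generate_autoregressive(predict_next: Callable[[List[Pixel]], List[float]],
                            length: int, start: List[Pixel]) -> List[Pixel]:
    """Build an image one pixel at a time, the way a language model adds words."""
    pixels = list(start)
    while len(pixels) < length:
        probs = predict_next(pixels)  # distribution over the palette
        next_pixel = max(range(len(probs)), key=probs.__getitem__)  # greedy pick
        pixels.append(next_pixel)
    return pixels

def toy_predictor(context: List[Pixel]) -> List[float]:
    """Hypothetical stand-in for a trained network: favors cycling through 4 colors."""
    probs = [0.1, 0.1, 0.1, 0.1]
    probs[(context[-1] + 1) % 4] = 0.7
    return probs

print(generate_autoregressive(toy_predictor, length=8, start=[0]))
# [0, 1, 2, 3, 0, 1, 2, 3]
```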

Instead, DALL-E 2 uses something called a diffusion model. Diffusion models are neural networks trained to clean images up by removing pixelated noise that the training process adds. The process involves taking images and changing a few pixels in them at a time, over many steps, until the original images are erased and you’re left with nothing but random pixels. “If you do this a thousand times, eventually the image looks like you have plucked the antenna cable from your TV set; it’s just snow,” says Björn Ommer, who works on generative AI at the University of Munich in Germany and who helped build the diffusion model that now powers Stable Diffusion.
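The noising process takes only a few lines. This sketch follows the common DDPM-style formulation, which differs slightly from the description above: instead of swapping out a few pixels, every step mixes a small amount of Gaussian noise into every pixel, x_t = sqrt(1 - beta) * x_{t-1} + sqrt(beta) * noise. The toy image, the fixed beta = 0.01, and the 1,000 steps are illustrative assumptions; real models use a schedule of beta values.

```python
import math
import random

def forward_diffusion(image, num_steps=1000, beta=0.01, seed=0):
    """Noise an image step by step: x_t = sqrt(1 - beta) * x_{t-1} + sqrt(beta) * eps."""
    rng = random.Random(seed)
    keep, mix = math.sqrt(1.0 - beta), math.sqrt(beta)
    x = list(image)
    for _ in range(num_steps):
        # Every pixel is shrunk slightly and receives fresh Gaussian noise.
        x = [keep * p + mix * rng.gauss(0.0, 1.0) for p in x]
    return x

image = [1.0, -1.0] * 8          # toy 16-pixel image, values scaled to [-1, 1]
snow = forward_diffusion(image)  # after 1,000 steps: essentially pure noise

# Amplitude of the original image that survives: sqrt(1 - beta) ** 1000
remaining = math.sqrt(1.0 - 0.01) ** 1000
print(f"{remaining:.4f}")  # 0.0066
```

Under these assumptions less than 1% of the original signal is left, which is the numerical version of Ommer’s “TV snow.”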

The neural network is then trained to reverse that process and predict what the less pixelated version of a given image would look like. The upshot is that if you give a diffusion model a mess of pixels, it will try to generate something a little cleaner. Plug the cleaned-up image back in, and the model will produce something cleaner still. Do this enough times and the model can take you all the way from TV snow to a high-resolution picture.
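The reverse loop is where the trained network does its work: each pass takes the current image, returns a slightly cleaner estimate, and that estimate is fed straight back in. In this sketch the network is replaced by a hypothetical `toy_denoiser` that is simply handed the clean image it should move toward. A real diffusion model has to infer that from what it learned during training, so only the loop structure here reflects the real technique.

```python
def toy_denoiser(noisy, expected_clean):
    """Hypothetical stand-in for a trained network.

    Moves each pixel 10% of the way toward the clean image it "expects";
    a real model must predict that image from patterns learned in training.
    """
    return [p + 0.1 * (c - p) for p, c in zip(noisy, expected_clean)]

def reverse_process(noise, expected_clean, steps):
    x = list(noise)
    for _ in range(steps):
        x = toy_denoiser(x, expected_clean)  # plug the cleaner image back in
    return x

target = [1.0, -1.0, 1.0, -1.0]
start = [0.3, 0.8, -0.5, 0.1]  # the "TV snow" we begin from
for steps in (1, 10, 100):
    out = reverse_process(start, target, steps)
    error = max(abs(o - t) for o, t in zip(out, target))
    print(steps, round(error, 4))  # error shrinks by 10% per pass
```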

AI art generators never work exactly how you want them to. They often produce hideous results that can resemble distorted stock art, at best. In my experience, the only way to really make the work look good is to add descriptors at the end with a style that looks aesthetically pleasing.

~Erik Carter

The trick with text-to-image models is that this process is guided by the language model that’s trying to match a prompt to the images the diffusion model is producing. This pushes the diffusion model toward images that the language model considers a good match.
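Guidance can be illustrated by adding a nudge toward a better match at every denoising step. This minimal sketch assumes a toy match score (a dot product between the image vector and a hypothetical text embedding), whose gradient with respect to the image is just the text embedding, plus a hypothetical denoiser that shrinks the noise by 10% per step. Real systems compute such gradients through neural networks or use related techniques such as classifier-free guidance; this only shows the principle.

```python
def match_score(image, text_embedding):
    """Toy stand-in for the language model's verdict: a dot product."""
    return sum(p * t for p, t in zip(image, text_embedding))

def denoise(image):
    """Hypothetical denoiser: shrinks every pixel's noise by 10%."""
    return [0.9 * p for p in image]

def guided_step(image, text_embedding, guidance_scale):
    denoised = denoise(image)
    # For a dot-product score, the gradient with respect to the image is
    # text_embedding itself, so adding a multiple of it raises the score.
    return [d + guidance_scale * t for d, t in zip(denoised, text_embedding)]

def sample(start, text_embedding, steps=50, guidance_scale=0.05):
    x = list(start)
    for _ in range(steps):
        x = guided_step(x, text_embedding, guidance_scale)
    return x

noise = [0.5, -0.3, 0.2]
prompt = [1.0, 0.0, -1.0]  # hypothetical text embedding
unguided = sample(noise, prompt, guidance_scale=0.0)
guided = sample(noise, prompt)
print(match_score(unguided, prompt) < match_score(guided, prompt))  # True
```

Without guidance the toy denoiser just fades the noise toward nothing in particular; with it, the result drifts toward whatever the scorer rates as a good match.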

But the models aren’t pulling the links between text and images out of thin air. Most text-to-image models today are trained on a large data set called LAION, which contains billions of pairings of text and images scraped from the internet. This means that the images you get from a text-to-image model are a distillation of the world as it’s represented online, distorted by prejudice (and pornography).

One last thing: there’s a small but crucial difference between the two most popular models, DALL-E 2 and Stable Diffusion. DALL-E 2’s diffusion model works on full-size images. Stable Diffusion, on the other hand, uses a technique called latent diffusion, invented by Ommer and his colleagues. It works on compressed versions of images encoded within the neural network in what’s known as a latent space, where only the essential features of an image are retained.
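Some rough arithmetic shows why working in latent space saves so much computation. The figures below assume the commonly cited Stable Diffusion v1 configuration: 512 × 512 RGB images compressed 8× along each side into a latent with 4 channels.

```python
def num_values(height: int, width: int, channels: int) -> int:
    """How many numbers the diffusion model must process per step."""
    return height * width * channels

pixel_space = num_values(512, 512, 3)             # full-size RGB image
latent_space = num_values(512 // 8, 512 // 8, 4)  # assumed 8x-downsampled, 4-channel latent
print(pixel_space, latent_space, pixel_space // latent_space)
# 786432 16384 48
```

Under these assumptions each denoising step handles about 48 times fewer numbers, which helps explain why Stable Diffusion can run on a good personal computer.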

This means Stable Diffusion requires less computing muscle to work. Unlike DALL-E 2, which runs on OpenAI’s powerful servers, Stable Diffusion can run on (good) personal computers. Much of the explosion of creativity and the rapid development of new apps is due to the fact that Stable Diffusion is both open source (programmers are free to change it, build on it, and make money from it) and lightweight enough for people to run at home.

Redefining creativity

For some, these models are a step toward artificial general intelligence, or AGI, an over-hyped buzzword referring to a future AI that has general-purpose or even human-like abilities. OpenAI has been explicit about its goal of achieving AGI. For that reason, Altman doesn’t care that DALL-E 2 now competes with a raft of similar tools, some of them free. “We’re here to make AGI, not image generators,” he says. “It will fit into a broader product road map. It’s one smallish element of what an AGI will do.”

That’s optimistic, to say the least: many experts believe that today’s AI will never reach that level. In terms of basic intelligence, text-to-image models are no smarter than the language-generating AIs that underpin them. Tools like GPT-3 and Google’s PaLM regurgitate patterns of text ingested from the many billions of documents they are trained on. Similarly, DALL-E and Stable Diffusion reproduce associations between text and images found across billions of examples online.

The results are dazzling, but poke too hard and the illusion shatters. These models make basic howlers, responding to “salmon in a river” with a picture of chopped-up fillets floating downstream, or to “a bat flying over a baseball stadium” with a picture of both a flying mammal and a wooden stick. That’s because they are built on top of a technology that is nowhere near understanding the world as humans (or even most animals) do.

Even so, it may be just a matter of time before these models learn better tricks. “People say it’s not very good at this thing now, and of course it isn’t,” says Cook. “But a hundred million dollars later, it could well be.”

That’s certainly OpenAI’s approach.

“We already know how to make it 10 times better,” says Altman. “We know there are logical reasoning tasks that it messes up. We’re going to go down a list of things, and we’ll put out a new version that fixes all of the current problems.”

If claims about intelligence and understanding are overblown, what about creativity? Among humans, we say that artists, mathematicians, entrepreneurs, kindergarten kids, and their teachers are all exemplars of creativity. But getting at what these people have in common is hard.

For some, it’s the results that matter most. Others argue that the way things are made, and whether there is intent in that process, is paramount.

Still, many fall back on a definition given by Margaret Boden, an influential AI researcher and philosopher at the University of Sussex, UK, who boils the concept down to three key criteria: to be creative, an idea or an artifact needs to be new, surprising, and valuable.

Beyond that, it’s often a case of knowing it when you see it. Researchers in the field known as computational creativity describe their work as using computers to produce results that would be considered creative if produced by humans alone.

Smith is happy to call this new breed of generative models creative, despite their stupidity. “It is very clear that there is innovation in these images that is not controlled by any human input,” she says. “The translation from text to image is often surprising and beautiful.”

Maria Teresa Llano, who studies computational creativity at Monash University in Melbourne, Australia, agrees that text-to-image models are stretching previous definitions. But Llano does not think they are creative. When you use these programs a lot, the results can start to become repetitive, she says. This means they fall short of some or all of Boden’s requirements. And that could be a fundamental limitation of the technology. By design, a text-to-image model churns out new images in the likeness of billions of images that already exist. Perhaps machine learning will only ever produce images that imitate what it’s been exposed to in the past.

That may not matter for computer graphics. Adobe is already building text-to-image generation into Photoshop; Blender, the open-source 3D graphics suite, has a Stable Diffusion plug-in. And OpenAI is collaborating with Microsoft on a text-to-image widget for Office.

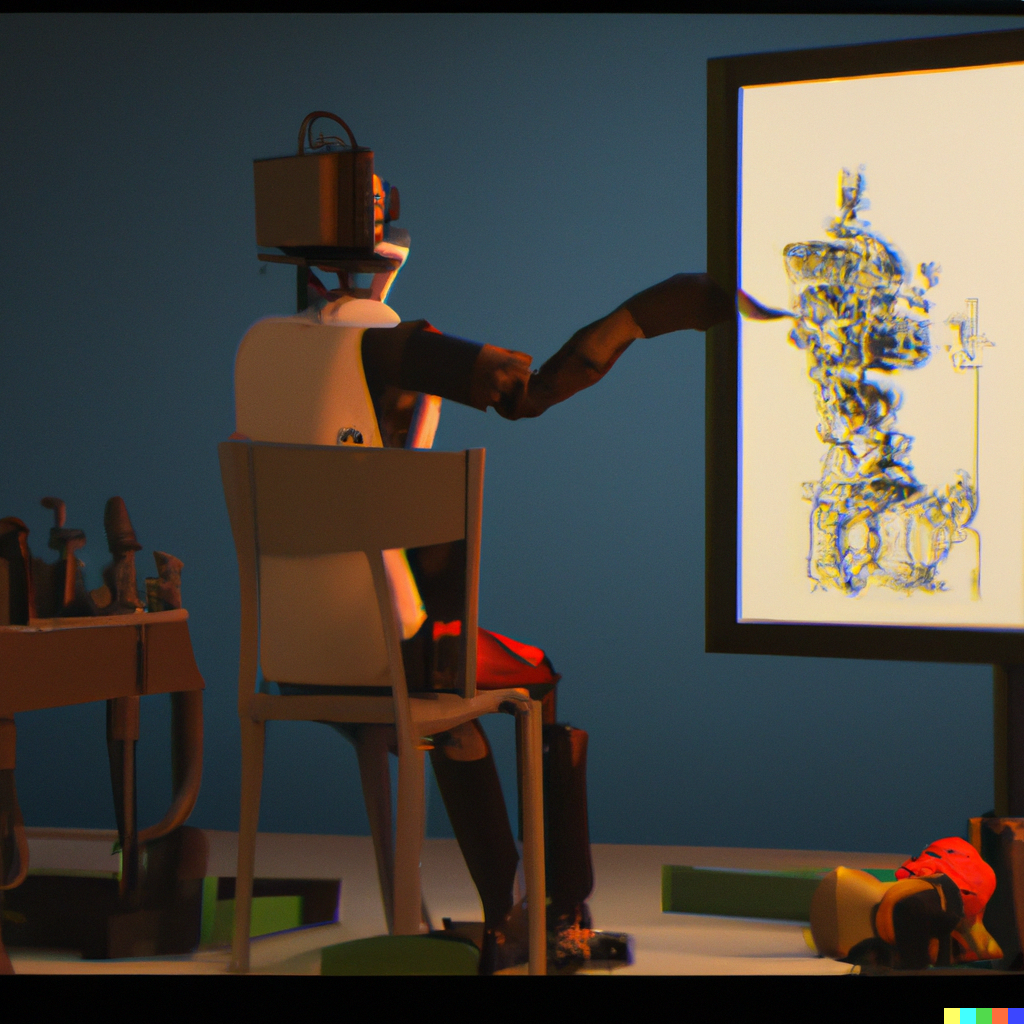

DALL-E 2 accepts either an image or written text as a prompt. The image above was created by uploading Erik Carter's final image back into DALL-E 2 as a prompt.
ERIK CARTER VIA DALL-E 2

It is in this kind of interaction, in future versions of these familiar tools, that the real impact may be felt: from machines that don’t replace human creativity but enhance it. “The creativity we see today comes from the use of the systems, rather than from the systems themselves,” says Llano. It comes from the back-and-forth, call-and-response required to produce the result you want.

This view is echoed by other researchers in computational creativity. It’s not just about what these machines do; it’s how they do it. Turning them into real creative partners means pushing them to be more autonomous, giving them creative responsibility, getting them to curate as well as create.

Aspects of that will come soon. Someone has already written a program called CLIP Interrogator that analyzes an image and comes up with a prompt to generate more images like it. Others are using machine learning to augment simple prompts with phrases designed to give the image extra quality and fidelity, effectively automating prompt engineering, a task that has only existed for a handful of months.

Meanwhile, as the flood of images continues, we’re laying down other foundations too. “The internet is now forever contaminated with images made by AI,” says Cook. “The images that we made in 2022 will be a part of any model that is made from now on.”


We will have to wait to see exactly what lasting impact these tools will have on creative industries, and on the entire field of AI. Generative AI has become one more tool for expression. Altman says he now uses generated images in personal messages the way he used to use emoji. “Some of my friends don’t even bother to generate the image; they type the prompt,” he says.

But text-to-image models may be just the start. Generative AI could eventually be used to produce designs for everything from new buildings to new drugs. Think text-to-X.

“People are going to realize that technique or craft is no longer the barrier; it’s now just their ability to imagine,” says Nelson.

Computers are already used in several industries to generate vast numbers of possible designs that are then sifted for ones that might work. Text-to-X models would allow a human designer to fine-tune that generative process from the start, using words to guide computers through an infinite number of options toward results that are not just possible but desirable.
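The generate-and-sift approach can be sketched as random sampling followed by filtering and ranking. Everything here is hypothetical: a “design” is a rectangular beam cross-section, feasibility is a crude stiffness threshold (bending stiffness of a rectangle grows with width × height³), and cost is the cross-sectional area. In the article’s framing, text-to-X would let a designer steer the generator with words rather than relying on blind sampling and hand-coded filters.

```python
import random

def random_design(rng):
    """Hypothetical design: a rectangular beam cross-section (width, height in cm)."""
    return {"width": rng.uniform(1.0, 20.0), "height": rng.uniform(1.0, 40.0)}

def is_feasible(design):
    # Crude stand-in for an engineering check: stiffness scales with
    # width * height**3, so require that product to clear a threshold.
    return design["width"] * design["height"] ** 3 >= 50_000

def cost(design):
    return design["width"] * design["height"]  # material used per unit length

def generate_and_sift(n=10_000, seed=1):
    rng = random.Random(seed)
    candidates = [random_design(rng) for _ in range(n)]   # generate widely...
    feasible = [d for d in candidates if is_feasible(d)]  # ...sift for what works...
    return min(feasible, key=cost) if feasible else None  # ...then rank the survivors

best = generate_and_sift()
print(best)
```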

Computers can conjure spaces filled with infinite possibility. Text-to-X will let us explore those spaces using words.

“I think that’s the legacy,” says Altman. “Images, video, audio: eventually, everything will be generated. I think it is just going to seep everywhere.”
