Much of this content is benign: animals in adorable outfits, envy-inspiring vacation photos, or enthusiastic reviews of bath pillows. But some of it is problematic, encompassing violent imagery, mis- and disinformation, harassment, or other harmful material. In the U.S., four in 10 Americans report they’ve been harassed online. In the U.K., 84% of internet users fear exposure to harmful content.

Consequently, content moderation (the monitoring of UGC) is essential for online experiences. In his book Custodians of the Internet, sociologist Tarleton Gillespie writes that effective content moderation is necessary for digital platforms to function, despite the “utopian notion” of an open internet. “There is no platform that does not impose rules, to some degree; not to do so would simply be untenable,” he writes. “Platforms must, in some form or another, moderate: both to protect one user from another, or one group from its antagonists, and to remove the offensive, vile, or illegal, as well as to present their best face to new users, to their advertisers and partners, and to the public at large.”

Content moderation is used to address a wide range of content across industries. Skillful content moderation can help organizations keep their users safe, their platforms usable, and their reputations intact. A best-practices approach to content moderation draws on increasingly sophisticated and accurate technical solutions while backstopping those efforts with human skill and judgment.

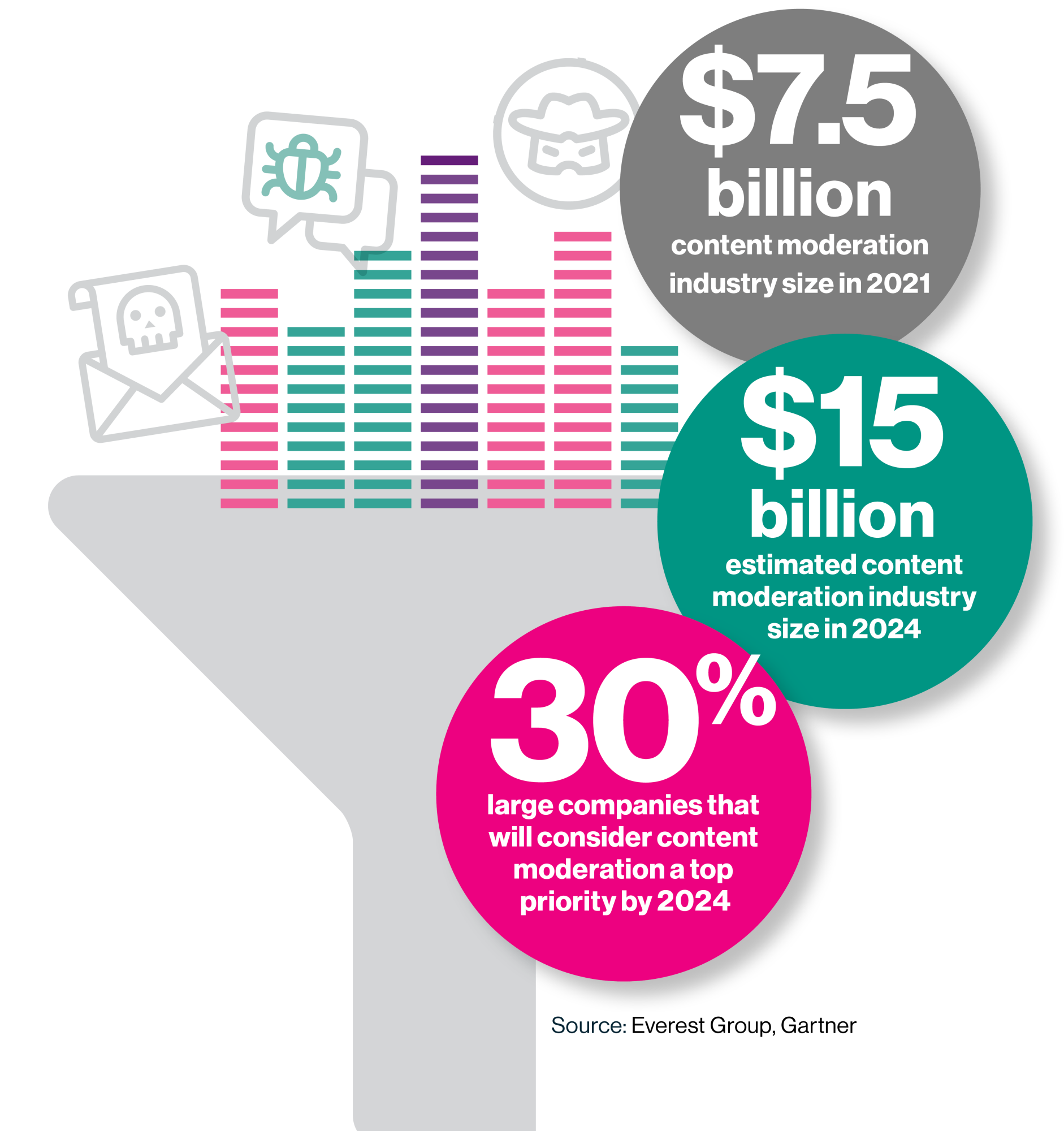

Content moderation is a rapidly growing industry, critical to all organizations and individuals who gather in digital spaces (which is to say, more than 5 billion people). According to Abhijnan Dasgupta, practice director specializing in trust and safety (T&S) at Everest Group, the industry was valued at about $7.5 billion in 2021, and experts anticipate that number will double by 2024. Gartner research suggests that nearly one-third (30%) of large companies will consider content moderation a top priority by 2024.

Content moderation: More than social media

Content moderators remove hundreds of thousands of pieces of problematic content every day. Facebook’s Community Standards Enforcement Report, for example, documents that in Q3 2022 alone, the company removed 23.2 million incidences of violent and graphic content and 10.6 million incidences of hate speech, in addition to 1.4 billion spam posts and 1.5 billion fake accounts. But though social media may be the most widely reported example, a huge number of industries rely on UGC (everything from product reviews to customer service interactions) and consequently require content moderation.

“Any site that allows information to come in that’s not internally produced has a need for content moderation,” explains Mary L. Gray, a senior principal researcher at Microsoft Research who also serves on the faculty of the Luddy School of Informatics, Computing, and Engineering at Indiana University. Other sectors that rely heavily on content moderation include telehealth, gaming, e-commerce and retail, and the public sector and government.

In addition to removing offensive content, content moderation can detect and eliminate bots, identify and remove fake user profiles, address phony reviews and ratings, delete spam, police deceptive advertising, mitigate predatory content (especially that which targets minors), and facilitate safe two-way communications in online messaging systems.

One area of serious concern is fraud, especially on e-commerce platforms. “There are a lot of bad actors and scammers trying to sell fake products, and there’s also a big problem with fake reviews,” says Akash Pugalia, the global president of trust and safety at Teleperformance, which provides non-egregious content moderation support for global brands. “Content moderators help ensure products follow the platform’s guidelines, and they also remove prohibited goods.”

This content was produced by Insights, the custom content arm of MIT Technology Review. It was not written by MIT Technology Review’s editorial staff.
