Carrick Flynn, a candidate in Oregon’s 6th Congressional District, seemed to drop out of the sky. With a stint at Oxford’s Future of Humanity Institute, a track record of voting in only two of the past 30 elections, and $11 million in support from a political action committee established by crypto billionaire Sam Bankman-Fried, Flynn didn’t fit into the local political scene, even though he’d grown up in the state. One constituent called him “Mr. Creepy Funds” in an interview with a local paper; another said he thought Flynn was a Russian bot.

The specter of crypto influence, a slew of expensive TV ads, and the fact that few locals had heard of or spoken to Flynn raised suspicions that he was a tool of outside financial interests. And while the rival candidate who led the primary race promised to fight for issues like better individual protections and stronger gun legislation, Flynn’s platform prioritized economic growth and preparedness for pandemics and other disasters. Both are pillars of “longtermism,” a growing strain of the ideology known as effective altruism (or EA), which is popular among an elite slice of people in tech and politics.

Even during an actual pandemic, Flynn’s focus struck many Oregonians as far-fetched and foreign. Perhaps unsurprisingly, he ended up losing the 2022 primary to the more politically experienced Democrat, Andrea Salinas. But despite Flynn’s lackluster showing, he made history as effective altruism’s first candidate for political office.

Since its birth in the late 2000s, effective altruism has aimed to answer the question “How can those with means have the most impact on the world in a quantifiable way?” It has also supplied clear methodologies for calculating the answer. Directing money to organizations that use evidence-based approaches is the one method EA is most known for. But as it has expanded from an academic philosophy into a community and a movement, its ideas of the “best” way to change the world have evolved as well.

“Longtermism,” the belief that unlikely but existential threats like a humanity-destroying AI revolt or global biological warfare are humanity’s most pressing problems, is integral to EA today. Of late, it has moved from the fringes of the movement to its forefront. That shift came with Flynn’s campaign, a flurry of mainstream media coverage, and a new treatise published by one of EA’s founding fathers, William MacAskill. The ideology is poised to take center stage as more believers in the tech and billionaire classes (which are, notably, mostly male and white) start to move millions into new PACs and projects. These include Bankman-Fried’s FTX Future Fund and Longview Philanthropy’s Longtermism Fund, which focus on theoretical menaces ripped from the pages of science fiction.

EA’s ideas have long faced criticism from within the fields of philosophy and philanthropy. Critics say they reflect white Western saviorism and an avoidance of structural problems in favor of abstract math. Not coincidentally, many of the same objections are lobbed at the tech industry at large. Such charges are only intensifying as EA’s pockets deepen and its purview stretches into a galaxy far, far away. Ultimately, the philosophy’s influence may be limited by how accurate those charges prove to be.

What is EA?

If effective altruism were a lab-grown species, its origin story would begin with DNA spliced from three parents: applied ethics, speculative technology, and philanthropy.

EA’s philosophical genes came from Peter Singer’s brand of utilitarianism and Oxford philosopher Nick Bostrom’s investigations into potential threats to humanity. From tech, EA drew on early research into the long-term impact of artificial intelligence, carried out at what’s now known as the Machine Intelligence Research Institute (MIRI) in Berkeley, California. In philanthropy, EA is part of a growing trend toward evidence-based giving. That trend is driven by members of the Silicon Valley nouveau riche who are eager to apply the strategies that made them money to the process of giving it away.


While these origins may seem diverse, the people involved are linked by social, economic, and professional class, and by a techno-utopian worldview. The early players are all still leaders in the movement’s interconnected constellation of nonprofits, foundations, and research organizations. They include:

- MacAskill, a Cambridge philosopher.
- Toby Ord, an Oxford philosopher.
- Holden Karnofsky, cofounder of the charity evaluator GiveWell.
- Dustin Moskovitz, a cofounder of Facebook who founded the nonprofit Open Philanthropy with his wife, Cari Tuna.

For effective altruists, a good cause is not good enough; only the very best should get funding in the areas most in need. By EA calculations, those areas are usually in developing nations. Personal connections might encourage someone to give to a local food bank or donate to the hospital that treated a parent. In EA terms, those connections are a distraction or, worse, a waste of money. The classic example of an EA-approved effort is the Against Malaria Foundation, which purchases and distributes mosquito nets in sub-Saharan Africa and other areas heavily affected by the disease. The price of a net is very small compared with the scale of its life-saving potential; this ratio of “value” to cost is what EA aims for. Other popular early EA causes include providing vitamin A supplements and malaria medication in African countries, and promoting animal welfare in Asia.

Within effective altruism’s framework, selecting one’s career is just as important as choosing where to make donations. EA defines a professional “fit” by whether a candidate has comparative advantages like exceptional intelligence or an entrepreneurial drive. If an effective altruist qualifies for a high-paying path, the ethos encourages “earning to give”: dedicating one’s life to building wealth in order to give it away to EA causes. Bankman-Fried has said that he’s earning to give. He even founded the crypto platform FTX with the express purpose of building wealth in order to redirect 99% of it. Now one of the richest crypto executives in the world, Bankman-Fried plans to give away up to $1 billion by the end of 2022.

“The allure of effective altruism has been that it’s an off-the-shelf methodology for being a highly sophisticated, impact-focused, data-driven funder,” says David Callahan. Callahan is the founder and editor of Inside Philanthropy and the author of a 2017 book on philanthropic trends, The Givers. Not only does EA suggest a clear and decisive framework, but the community also offers a set of resources for potential EA funders:

- GiveWell, a nonprofit that uses an EA-driven evaluation rubric to recommend charitable organizations.
- EA Funds, which allows individuals to donate to curated pools of charities.
- 80,000 Hours, a career-coaching organization.
- A vibrant discussion forum at Effectivealtruism.org, where leaders like MacAskill and Ord regularly chime in.

Effective altruism’s original laser focus on measurement has brought rigor to a field that has historically lacked accountability for big donors with last names like Rockefeller and Sackler. “It has been an overdue, much-needed counterweight to the typical practice of elite philanthropy, which has been very inefficient,” says Callahan.

But where exactly are effective altruists directing their earnings? Who benefits? As with all giving, in EA or otherwise, there are no set rules for what constitutes “philanthropy.” Charitable organizations benefit from a tax code that incentivizes the super-rich to establish and control their own charitable endeavors. That comes at the expense of public tax revenues, local governance, or public accountability. EA organizations are able to leverage the practices of traditional philanthropy while enjoying the shine of an effectively disruptive approach to giving. The movement has formalized its community’s commitment to donate with the Giving What We Can Pledge, mirroring another old-school philanthropic practice. However, there are no giving requirements to be publicly listed as a pledger. Tracking the full influence of EA’s philosophy is tricky. Still, 80,000 Hours has estimated that $46 billion was committed to EA causes between 2015 and 2021, with donations growing about 20% each year. GiveWell calculates that in 2021 alone, it directed over $187 million to malaria nets and medication; by the organization’s math, that’s over 36,000 lives saved.
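As a rough check on GiveWell’s figures, dividing its reported 2021 malaria spending by its estimated lives saved gives an implied average cost per life. This is a minimal sketch, not GiveWell’s own cost-effectiveness model. It assumes both headline numbers are exact, although each is reported as a lower bound (“over”). The helper name `implied_cost_per_life` is hypothetical.

```python
# Headline figures as reported in this article (GiveWell's own 2021 estimates).
# Both are lower bounds ("over"), so the ratio below is only a rough average.
MALARIA_SPENDING_USD = 187_000_000
ESTIMATED_LIVES_SAVED = 36_000


def implied_cost_per_life(spending_usd: int, lives_saved: int) -> float:
    """Hypothetical helper: average dollars spent per life saved."""
    if lives_saved <= 0:
        raise ValueError("lives_saved must be positive")
    return spending_usd / lives_saved


cost = implied_cost_per_life(MALARIA_SPENDING_USD, ESTIMATED_LIVES_SAVED)
print(f"Implied cost per life saved: ${cost:,.0f}")  # prints $5,194
```

This outcome-per-dollar ratio is the kind of “value” to cost that EA evaluators rank by. A simple division cannot show how GiveWell attributes lives saved to each grant.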

Accountability is significantly harder with longtermist causes like biosecurity or “AI alignment,” a set of efforts aimed at ensuring that the power of AI is harnessed toward ends generally understood as “good.” For a growing number of effective altruists, such causes now take precedence over mosquito nets and vitamin A supplements. “The things that matter most are the things that have long-term impact on what the world will look like,” Bankman-Fried said in an interview earlier this year. “There are trillions of people who have not yet been born.” Bankman-Fried’s views are influenced by longtermism’s utilitarian calculations, which flatten lives into single units of value. By this math, the trillions of humans yet to be born represent a greater moral obligation than the billions alive today. The top priority is any threat that could prevent future generations from reaching their full potential. That includes extinction, and also technological stagnation, which MacAskill deems equally dire in his new book, What We Owe the Future.
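The utilitarian arithmetic behind longtermism can be sketched in a few lines. This is a hypothetical illustration, not MacAskill’s or Bankman-Fried’s own model. It rests on three assumptions:

- Every life counts as one equal unit of value.
- One trillion is a placeholder for “trillions” of future people.
- The comparison pits saving one million lives today against a made-up one-in-10,000 reduction in extinction risk.

Exact fractions avoid floating-point rounding.

```python
from fractions import Fraction

# Assumed placeholder figures, chosen only to illustrate the reasoning.
FUTURE_PEOPLE = 1_000_000_000_000                # "trillions" not yet born (assumed: 1 trillion)
PRESENT_LIVES_SAVED = 1_000_000                  # a large present-day intervention (assumed)
EXTINCTION_RISK_REDUCTION = Fraction(1, 10_000)  # assumed reduction in extinction probability


def expected_lives(lives_at_stake: int, probability: Fraction) -> Fraction:
    """Expected lives affected, weighting every life (present or future) as one unit."""
    return lives_at_stake * probability


present = expected_lives(PRESENT_LIVES_SAVED, Fraction(1))  # a certain outcome
longtermist = expected_lives(FUTURE_PEOPLE, EXTINCTION_RISK_REDUCTION)

print("Present-day intervention:", present, "expected lives")
print("Longtermist intervention:", longtermist, "expected lives")
print("Ratio:", longtermist / present)
```

Under these assumptions, the speculative intervention “wins” by a factor of 100. The result is driven entirely by guessed inputs, which MacAskill himself calls “best guesses.” Lowering the assumed probability to one in 10 million flips the comparison.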

In his book, MacAskill discusses his own journey from longtermism skeptic to true believer and urges others to follow the same path. The existential risks he lays out are specific: “The future could be terrible, falling to authoritarians who use surveillance and AI to lock in their ideology for all time, or even to AI systems that seek to gain power rather than promote a thriving society. Or there could be no future at all: we could kill ourselves off with biological weapons or wage an all-out nuclear war that causes civilisation to collapse and never recover.”

Bankman-Fried created the FTX Future Fund this year to help guard against these exact possibilities. It is a project within his philanthropic foundation. Its focus areas include “space governance,” “artificial intelligence,” and “empowering exceptional people.” The fund’s website acknowledges that many of its bets “will fail.” (Its primary goal for 2022 is to test new funding models, but the fund’s site does not define what “success” may look like.) As of June 2022, the FTX Future Fund had made 262 grants and investments. Recipients included a Brown University academic researching long-term economic growth and a Cornell University academic researching AI alignment. Another was an organization working on legal research around AI and biosecurity, which was born out of Harvard Law’s EA group.

Sam Bankman-Fried, one of the world’s richest crypto executives, is also one of the country’s largest political donors. He plans to give away up to $1 billion by the end of 2022. (COINTELEGRAPH VIA WIKIMEDIA COMMONS)

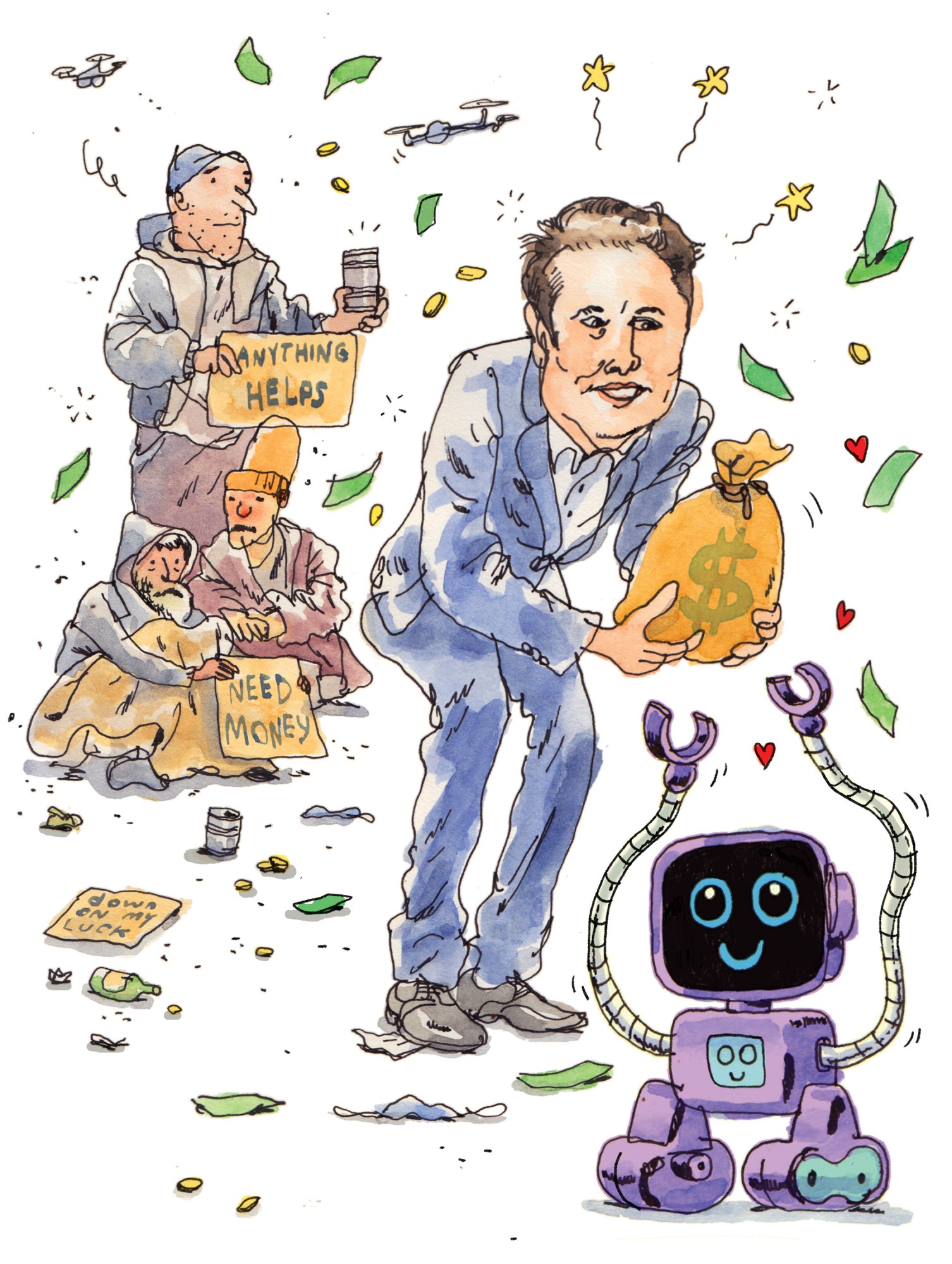

Bankman-Fried is hardly the only tech billionaire pushing forward longtermist causes. Open Philanthropy, the EA charitable organization funded primarily by Moskovitz and Tuna, has directed $260 million to addressing “potential risks from advanced AI” since its founding. This year, the FTX Future Fund and Open Philanthropy together supported Longview Philanthropy with more than $15 million. Longview then announced its new Longtermism Fund. Vitalik Buterin, one of the founders of the blockchain platform Ethereum, is the second-largest recent donor to MIRI. MIRI’s mission is “to ensure [that] smarter-than-human artificial intelligence has a positive impact.” MIRI’s other donors include:

- The Thiel Foundation.
- Ben Delo, cofounder of the crypto exchange BitMEX.
- Jaan Tallinn, one of the founding engineers of Skype, who is also a cofounder of Cambridge’s Centre for the Study of Existential Risk (CSER).

Elon Musk is yet another tech mogul dedicated to fighting longtermist existential risks. He’s even claimed that his for-profit operations, including SpaceX’s mission to Mars, are philanthropic efforts supporting humanity’s progress and survival. (MacAskill has recently expressed concern that his philosophy is getting conflated with Musk’s “worldview.” However, EA aims for an expanded audience, and it seems unreasonable to expect rigid adherence to the exact belief system of its creators.)

Criticism and change

Even before the foregrounding of longtermism, effective altruism had been criticized for elevating the mindset of the “benevolent capitalist,” as philosopher Amia Srinivasan wrote in her 2015 review of MacAskill’s first book. Critics also faulted it for emphasizing individual agency within capitalism. That emphasis comes at the expense of more foundational critiques of the systems that have made one part of the world wealthy enough to theorize about how best to help the rest.

EA’s earn-to-give philosophy raises the question of why the wealthy should get to decide where funds go in a highly inequitable world. The question is sharper if they may be extracting that wealth from employees’ labor or the public, as may be the case with some crypto executives. “My ideological predisposition starts with the belief that folks don’t earn tremendous amounts of money without it being at the expense of other people,” says Farhad Ebrahimi. Ebrahimi is founder and president of the Chorus Foundation, which mainly funds US organizations working to combat climate change. They do so by shifting economic and political power to the communities most affected by it.

Many of the foundation’s grantees are groups led by people of color. Chorus is also what’s known as a spend-down foundation. In other words, Ebrahimi says, Chorus’s work will be successful when its funds are fully redistributed.


Ebrahimi objects to EA’s approach of supporting targeted interventions rather than endowing local organizations to define their own priorities: “Why wouldn’t you want to support having the communities that you want the money to go to be the ones to build economic power? That’s an individual saying, ‘I want to build my economic power because I think I’m going to make good decisions about what to do with it’ … It seems very ‘benevolent dictator’ to me.”

Effective altruists would respond that their moral obligation is to fund the most demonstrably transformative projects as defined by their framework, no matter what else is left behind. In a 2018 interview, MacAskill suggested that before recommending any structural power shifts as a priority, he’d need to see “an argument that opposing inequality in some particular way is actually going to be the best thing to do.”

VICTOR KERLOW

However, a small group of individuals with similar backgrounds has determined the formula for the most critical causes and “best” solutions. That should call into question the unbiased rigor EA is known for. The top nine charities featured on GiveWell’s website today work in developing nations with communities of color. Yet the EA community stands at 71% male and 76% white, with the largest percentage living in the US and the UK, according to a 2020 survey by the Centre for Effective Altruism (CEA). This may not be surprising, given that the philanthropic community at large has long been criticized for homogeneity. But some studies have shown that charitable giving in the US is actually growing in diversity, which casts EA’s demographic breakdown in a different light:

- A 2012 report by the W. K. Kellogg Foundation found that both Asian-American and Black households gave away a larger percentage of their income than white households.
- Research from the Indiana University Lilly Family School of Philanthropy found in 2021 that 65% of Black households and 67% of Hispanic households surveyed donated to charity on a regular basis, along with 74% of white households.
- Donors of color were more likely to be involved in informal avenues of giving, such as crowdfunding, mutual aid, or giving circles, which may not be accounted for in other reports.

EA’s sales pitch does not appear to be reaching these donors.

While EA proponents say its approach is data driven, EA’s calculations defy best practices within the tech industry for dealing with data. “This assumption that we’re going to calculate the single best thing to do in the world, have all this data and make these decisions, is so similar to the issues that we talk about in machine learning, and why you shouldn’t do that,” says Timnit Gebru. Gebru is a leader in AI ethics and the founder and executive director of the Distributed AI Research Institute (DAIR), which centers diversity in its AI research.

Ethereum cofounder Vitalik Buterin is the second-largest recent donor to Berkeley’s Machine Intelligence Research Institute, whose mission is “to ensure [that] smarter-than-human artificial intelligence has a positive impact.” (JOHN PHILLIPS/GETTY IMAGES VIA WIKIMEDIA COMMONS)

Gebru and others have written extensively about the dangers of leveraging data without deeper analysis and without making sure it comes from diverse sources. In machine learning, this leads to dangerously biased models. In philanthropy, a narrow definition of success rewards alignment with EA’s value system over other worldviews. It also penalizes nonprofits working on longer-term or more complex strategies that can’t be translated into EA’s math. The research that EA’s assessments rely on may also be flawed or subject to change. A 2004 study elevated deworming (distributing drugs for parasitic infections) to one of GiveWell’s top causes. That study has since come under serious fire. Some researchers claim to have debunked it, while others have been unable to replicate the results behind the conclusion that deworming would save huge numbers of lives. Despite the uncertainty surrounding this intervention, GiveWell directed more than $12 million to deworming charities through its Maximum Impact Fund this year.

The voices of dissent are growing louder as EA’s influence spreads and more money is directed toward longtermist causes. CSER researcher Luke Kemp, a longtermist himself by some definitions, believes that the growing focus of the EA research community is based on a limited, minority perspective. He’s been disappointed with the lack of diversity of thought and leadership he’s found in the field. Last year, he and his colleague Carla Zoe Cremer wrote and circulated a preprint titled “Democratizing Risk.” It critiques the community’s focus on the “techno-utopian approach” to the exclusion of other frameworks that reflect more common moral worldviews. That approach assumes that pursuing technology to its maximum development is an undeniable net positive. “There’s a small number of key funders who have a very particular ideology, and either consciously or unconsciously select for the ideas that most resonate with what they want. You have to speak that language to move higher up the hierarchy and get more funding,” Kemp says.


According to Kemp, even the basic concept of longtermism has been hijacked from legal and economic scholars of the 1960s, ’70s, and ’80s. Those scholars were focused on intergenerational equity and environmentalism, priorities that have notably dropped away from the EA version of the philosophy. Indeed, the central premise that “future people count,” as MacAskill says in his 2022 book, is hardly new. The Native American concept of the “seventh generation principle” and similar ideas in indigenous cultures across the globe ask each generation to consider those that came before and those that will come after. Integral to these concepts, though, is the idea that the past holds valuable lessons for action today. This is especially true where our ancestors made choices that have led to environmental and economic crises.

Longtermism sees history differently: as a forward march toward inevitable progress. MacAskill references the past often in What We Owe the Future, but only as case studies on the life-improving impact of technological and moral development. He treats the abolition of slavery, the Industrial Revolution, and the women’s rights movement as evidence of how important it is to continue humanity’s arc of progress before the wrong values get “locked in” by despots. What are the “right” values? MacAskill is coy about articulating them. He argues that “we should focus on promoting more abstract or general moral principles” to ensure that “moral changes stay relevant and robustly positive into the future.”

Worldwide and ongoing climate change already affects the under-resourced more than the elite. Yet it is notably not a core longtermist cause, as philosopher Émile P. Torres points out in their critiques. Longtermists argue that while climate change threatens millions of lives, it probably won’t wipe out all of humanity. In their view, those with the wealth and means to survive can carry on fulfilling our species’ potential. Tech billionaires like Thiel and Larry Page already have plans and real estate in place to ride out a climate apocalypse. (MacAskill, in his new book, names climate change as a serious concern for those alive today. However, he considers it an existential threat only in the “extreme” form where agriculture won’t survive.)

One final curious feature of EA’s version of the long view is where its logic leads. It ends up in a specific list of far-off, technology-based threats to civilization that just happen to align with many of the original EA cohort’s areas of research. “I am a researcher in the field of AI,” says Gebru, “but to come to the conclusion that in order to do the most good in the world you have to work on artificial general intelligence is very strange. It’s like trying to justify the fact that you want to think about the science fiction scenario and you don’t want to think about real people, the real world, and actual structural issues. You want to justify how you want to pull billions of dollars into that while people are starving.”

Some EA leaders seem aware that criticism and change are key to expanding the community and strengthening its impact. MacAskill and others have made it explicit that their calculations are estimates. “These are our best guesses,” MacAskill offered on a 2020 podcast episode. They have also said they’re eager to improve through critical discourse. Both GiveWell and CEA have pages on their websites titled “Our Mistakes.” In June, CEA ran a contest inviting critiques on the EA forum, and the Future Fund has launched prizes of up to $1.5 million for critical perspectives on AI.

“We recognize that the problems EA is trying to address are really, really big and we don’t have a hope of solving them with only a small segment of people,” GiveWell board member and CEA community liaison Julia Wise says of EA’s diversity statistics. “We need the talents that lots of different kinds of people can bring to address these worldwide problems.” Wise also spoke on the topic at the 2020 EA Global Conference, and she actively discusses inclusion and community power dynamics on the CEA forum. The Centre for Effective Altruism supports a mentorship program for women and nonbinary people, founded, incidentally, by Carrick Flynn’s wife. Wise says the program is expanding to other underrepresented groups in the EA community. CEA has also tried to hold conferences in more locations worldwide to welcome a more geographically diverse group. But these efforts appear limited in scope and impact; CEA’s public-facing page on diversity and inclusion hasn’t even been updated since 2020. The techno-utopian tenets of longtermism are taking a front seat in EA’s rocket ship, and a few billionaire donors are charting its path into the future. At this point, it may be too late to alter the DNA of the movement.

Politics and the future

Despite the sci-fi sheen, effective altruism today is a conservative project. It consolidates decision-making behind a technocratic belief system and a small set of individuals, potentially at the expense of local and intersectional visions for the future. But EA’s community and successes were built around clear methodologies. Those methods may not transfer into the more nuanced political arena that some EA leaders and a few big donors are pushing toward. According to Wise, the community at large is still divided on politics as a way of pursuing EA’s goals. Some dissenters believe politics is too polarized a space for effective change.

But EA is not the only charitable movement looking to political action to reshape the world; the philanthropic field in general has been moving into politics for greater impact. “We have an existential political crisis that philanthropy has to deal with. Otherwise, a lot of its other goals are going to be hard to achieve,” says Inside Philanthropy’s Callahan, using a definition of “existential” that differs from MacAskill’s. EA may offer a clear rubric for determining how to give charitably, but the political arena presents a messier challenge. “There’s no easy metric for how to gain political power or shift politics,” he says. “And Sam Bankman-Fried has so far demonstrated himself not the most effective political giver.”

Bankman-Fried has described his own political giving as “more policy than politics.” He has donated primarily to Democrats through two PACs:

- His short-lived Protect Our Future PAC, which backed Carrick Flynn in Oregon.
- The Guarding Against Pandemics PAC, which is run by his brother Gabe and publishes a cross-party list of “champions” to support.

Ryan Salame, Bankman-Fried’s co-CEO at FTX, funded his own PAC, American Dream Federal Action, which focuses mainly on Republican candidates. (Bankman-Fried has said Salame shares his passion for preventing pandemics.) Guarding Against Pandemics and the Open Philanthropy Action Fund, Open Philanthropy’s political arm, spent more than $18 million on a California ballot initiative this fall. The initiative would fund pandemic research and action through a new tax.

So while longtermist funds are certainly making waves behind the scenes, Flynn’s primary loss in Oregon may signal something about EA’s more visible electoral efforts. To win over real-world voters, they may need to draw on new and diverse strategies. Vanessa Daniel is founder and former executive director of Groundswell, one of the largest funders of the US reproductive justice movement. She believes that big donations and 11th-hour interventions will never rival grassroots organizing in making real political change. “Slow and patient organizing led by Black women, communities of color, and some poor white communities created the tipping point in the 2020 election that saved the country from fascism and allowed some window of opportunity to get things like the climate deal passed,” she says. Daniel also takes issue with the idea that metrics are the exclusive domain of rich, white, and male-led approaches. “I’ve talked to so many donors who think that grassroots organizing is the equivalent of planting magical beans and expecting things to grow. This is not the case,” she says. “The data is right in front of us. And it doesn’t require the collateral damage of millions of people.”

Open Philanthropy, the EA charitable organization funded primarily by Dustin Moskovitz and Cari Tuna, has directed $260 million to addressing “potential risks from advanced AI” since its founding. (COURTESY OF ASANA)

The question now is whether the culture of EA will allow the community and its major donors to learn from such lessons. In May, Bankman-Fried admitted in an interview that there are a few takeaways from the Oregon loss, “in terms of thinking about who to support and how much.” He added that he sees “decreasing marginal gains from funding.” In August, Bankman-Fried appeared to have shut down funding through his Protect Our Future PAC. By then, it had distributed a total of $24 million over six months to candidates supporting pandemic prevention. The move perhaps signaled an end to one political experiment. (Or perhaps it was just pragmatic belt-tightening after the severe and sustained downturn in the crypto market, the source of Bankman-Fried’s immense wealth.)

Others in the EA community draw different lessons from the Flynn campaign. On the forum at Effectivealtruism.org, Daniel Eth, a researcher at the Future of Humanity Institute, posted a lengthy postmortem of the race. He expressed surprise that the candidate couldn’t win over the general electorate when he seemed “unusually selfless and intelligent, even for an EA.” But Eth didn’t propose radically new strategies for a future run. His only changes were to ensure that candidates vote more regularly and spend more time in the district. Otherwise, he proposed doubling down on EA’s existing approach: “Politics might somewhat degrade our typical epistemics and rigor. We should guard against this.” Members of the EA community left 93 comments on Eth’s post. Some supported Eth’s analysis, others urged lobbying over electioneering, and still others expressed frustration that effective altruists are funding political efforts at all. At this rate, political causes are not likely to make it to the front page of GiveWell anytime soon.

Money can move mountains. As EA takes on larger platforms with larger amounts of funding from billionaires and tech industry insiders, their wealth will likely continue to elevate pet EA causes and candidates. But if the movement aims to conquer the political landscape, EA leaders may hit a wall. Whatever their political strategies, they may find that their messages don’t connect with people living with local, present-day challenges like insufficient housing and food insecurity. EA began in academia and the tech industry as a heady philosophical plan for distributing inherited and institutional wealth. That may have gotten the movement this far, but those same roots likely can’t support its hopes of expanding its influence.

Rebecca Ackermann is a writer and artist in San Francisco.