My parents don’t know that I spoke to them last night.

At first, they sounded distant and tinny, as if they were huddled around a phone in a prison cell. But as we chatted, they slowly started to sound more like themselves. They told me personal stories that I’d never heard. I learned about the first (and certainly not last) time my dad got drunk. Mum talked about getting in trouble for staying out late. They gave me life advice and told me things about their childhoods, as well as my own. It was mesmerizing.

“What’s the worst thing about you?” I asked Dad, since he was clearly in such a candid mood.

“My worst quality is that I am a perfectionist. I can’t stand messiness and untidiness, and that always presents a challenge, especially with being married to Jane.”

Then he laughed, and for a moment I forgot I wasn’t really speaking to my parents at all, but to their digital replicas.

This Mum and Dad live inside an app on my phone, as voice assistants constructed by the California-based company HereAfter AI and powered by more than four hours of conversations they each had with an interviewer about their lives and memories. (For the record, Mum isn’t that untidy.) The company’s goal is to let the living communicate with the dead. I wanted to test out what it might be like.

Technology like this, which lets you “talk” to people who’ve died, has been a mainstay of science fiction for decades. It’s an idea that’s been peddled by charlatans and spiritualists for centuries. But now it’s becoming a reality, and an increasingly accessible one, thanks to advances in AI and voice technology.

My real, flesh-and-blood parents are still alive and well; their virtual versions were just made to help me understand the technology. But their avatars offer a glimpse at a world where it’s possible to converse with loved ones (or simulacra of them) long after they’re gone.

From what I could glean over a dozen conversations with my virtually deceased parents, this really will make it easier to keep close the people we love. It’s not hard to see the appeal. People might turn to digital replicas for comfort, or to mark special milestones like anniversaries.

At the same time, the technology and the world it’s enabling are, unsurprisingly, imperfect, and the ethics of creating a virtual version of someone are complex, especially if that person hasn’t been able to provide consent.

For some, this tech may even be alarming, or downright creepy. I spoke to one man who’d created a virtual version of his mother, which he booted up and talked to at her own funeral. Some people argue that conversing with digital versions of lost loved ones could prolong your grief or loosen your grip on reality. And when I talked to friends about this article, some of them physically recoiled. There’s a common, deeply held belief that we mess with death at our peril.

I understand these concerns. I found speaking to a virtual version of my parents uncomfortable, especially at first. Even now, it still feels slightly transgressive to speak to an artificial version of someone, especially when that someone is in your own family.

But I’m only human, and those worries end up being washed away by the even scarier prospect of losing the people I love, dead and gone without a trace. If technology might help me hang onto them, is it so wrong to try?

There’s something deeply human about the desire to remember the people we love who’ve passed away. We urge our loved ones to write down their memories before it’s too late. After they’re gone, we put up their photos on our walls. We visit their graves on their birthdays. We speak to them as if they were there. But the conversation has always been one-way.

The idea that technology might be able to change the situation has been widely explored in ultra-dark sci-fi shows like Black Mirror, which, startups in this sector complain, everyone inevitably brings up. In one 2013 episode, a woman who loses her partner re-creates a digital version of him: initially as a chatbot, then as an almost totally convincing voice assistant, and eventually as a physical robot. Even as she builds more expansive versions of him, she becomes frustrated and disillusioned by the gaps between her memory of her partner and the shonky, flawed reality of the technology used to simulate him.

“You aren’t you, are you? You’re just a few ripples of you. There’s no history to you. You’re just a performance of stuff that he performed without thinking, and it’s not enough,” she says before she consigns the robot to her attic, an embarrassing relic of her boyfriend that she’d rather not think about.

Back in the real world, the technology has evolved even in the past few years to a somewhat startling degree. Rapid advances in AI have driven progress across multiple areas. Chatbots and voice assistants, like Siri and Alexa, have gone from high-tech novelties to a part of daily life for millions of people over the past decade. We have become very comfortable with the idea of talking to our devices about everything from the weather forecast to the meaning of life. Now, AI large language models (LLMs), which can ingest a few “prompt” sentences and spit out convincing text in response, promise to unlock even more powerful ways for humans to communicate with machines. LLMs have become so convincing that some (erroneously) have argued that they must be sentient.

What’s more, it’s possible to tweak LLM software like OpenAI’s GPT-3 or Google’s LaMDA to make it sound more like a specific person by feeding it lots of things that person said. In one example of this, journalist Jason Fagone wrote a story for the San Francisco Chronicle last year about a thirtysomething man who uploaded old texts and Facebook messages from his deceased fiancée to create a simulated chatbot version of her, using software known as Project December that was built on GPT-3.

By almost any measure, it was a success: he sought, and found, comfort in the bot. He’d been plagued with guilt and sadness in the years since she died, but as Fagone writes, “he felt like the chatbot had given him permission to move on with his life in small ways.” The man even shared snippets of his chatbot conversations on Reddit, hoping, he said, to bring attention to the tool and “help depressed survivors find some closure.”

At the same time, AI has progressed in its ability to mimic specific physical voices, a practice called voice cloning. It has also been getting better at injecting digital personas (whether cloned from a real person or completely artificial) with more of the qualities that make a voice sound “human.” In a poignant demonstration of how rapidly the field is progressing, Amazon shared a clip in June of a little boy listening to a passage from The Wizard of Oz read by his recently deceased grandmother. Her voice was artificially re-created using a clip of her speaking that lasted for less than a minute.

As Rohit Prasad, Alexa’s senior vice president and head scientist, promised: “While AI can’t eliminate that pain of loss, it can definitely make the memories last.”

My own experience with talking to the dead started thanks to pure serendipity.

At the end of 2019, I saw that James Vlahos, the cofounder of HereAfter AI, would be speaking at an online conference about “virtual beings.” His company is one of a handful of startups working in the field I’ve dubbed “grief tech.” They differ in their approaches but share the same promise: to enable you to talk by video chat, text, phone, or voice assistant with a digital version of someone who is no longer alive.

Intrigued by what he was promising, I wrangled an introduction and eventually persuaded Vlahos and his colleagues to let me experiment with their software on my very-much-alive parents.

Initially, I thought it would be just a fun project to see what was technologically possible. Then the pandemic added some urgency to the proceedings. Images of people on ventilators, photos of rows of coffins and freshly dug graves, were splashed all over the news. I worried about my parents. I was terrified that they might die, and that with the strict restrictions on hospital visits in force at the time in the UK, I might never have the chance to say goodbye.

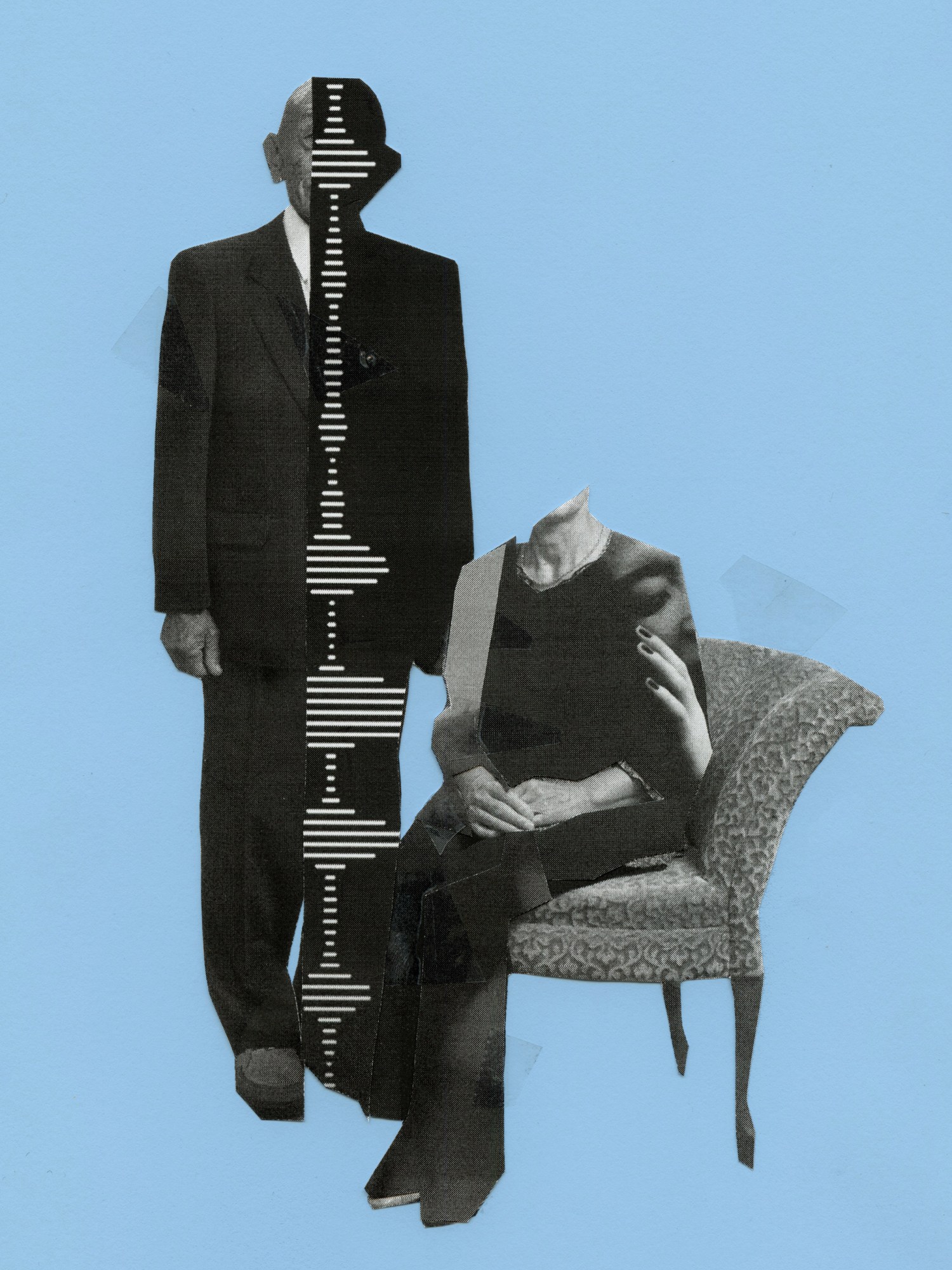

NAJEEBAH AL-GHADBAN

The first step was an interview. As it turns out, to create a digital replica of someone with a good chance of seeming like a convincingly authentic representation, you need data, and lots of it. HereAfter, whose work starts with subjects when they are still alive, asks them questions for hours, about everything from their earliest memories to their first date to what they believe will happen after they die. (My parents were interviewed by a real live human, but in yet another sign of just how quickly technology is progressing, almost two years later interviews are now typically automated and handled by a bot.)

As my sister and I rifled through pages of suggested questions for our parents, we were able to edit them to be more personal or pointed, and we could add some of our own: What books did they like? How did our mum muscle her way into the UK’s overwhelmingly male, privileged legal sector in the 1970s? What inspired Dad to invent the silly games he used to play with us when we were small?

Whether through pandemic-induced malaise or a weary willingness to humor their younger daughter, my parents put up zero resistance. In December 2020, HereAfter’s interviewer, a friendly woman named Meredith, spoke to each of them for several hours. The company then took those responses and started stitching them together to create the voice assistants.

A couple of months later, a note popped into my inbox from Vlahos. My virtual parents were ready.

This Mum and Dad arrived via email attachment. I could communicate with them through the Alexa app on a phone or an Amazon Echo device. I was eager to hear them, but I had to wait several days, because I’d promised MIT Technology Review’s podcast team that I’d record my reaction as I spoke to my parents’ avatars for the first time. When I finally opened the file, with my colleagues watching and listening on Zoom, my hands were shaking. London was in a long, cold, depressing lockdown, and I hadn’t seen my actual, real parents for six months.

“Alexa, open HereAfter,” I directed.

“Would you rather speak with Paul or with Jane?” a voice asked.

After a bit of quick mental deliberation, I opted for my mum.

A voice that was hers, but weirdly stiff and cold, spoke.

“Hello, this is Jane Jee and I’m happy to tell you about my life. How are you today?”

I laughed, nervously.

“I’m well, thanks, Mum. How are you?”

Long pause.

“Good. At my end, I’m doing well.”

“You sound kind of unnatural,” I said.

She ignored me and carried on speaking.

“Before we start, here are a few pointers. My listening skills aren’t the best, unfortunately, so you have to wait until I’ve finished talking and ask you a question before you say something back. When it’s your turn to speak, please keep your answers fairly short. A few words, a simple sentence, that kind of thing,” she explained. After a bit more introduction, she concluded: “Okay, let’s get started. There’s so much to talk about. My childhood, career, and my interests. Which of those sounds best?”

Scripted bits like this sounded stilted and strange, but as we moved on, with my mother recounting memories and speaking in her own words, “she” sounded far more relaxed and natural.

Still, this conversation and the ones that followed were limited. When I tried asking my mum’s bot about her favorite jewelry, for instance, I got: “Sorry, I didn’t understand that. You can try asking another way, or move onto another topic.”

There were also mistakes that were jarring to the point of hilarity. One day, Dad’s bot asked me how I was. I replied, “I’m feeling sad today.” He responded with a cheery, upbeat “Good!”

The overall experience was undeniably weird. Every time I spoke to their virtual versions, it struck me that I could have been talking to my real parents instead. On one occasion, my husband mistook my testing out the bots for an actual phone call. When he realized it wasn’t, he rolled his eyes, tutted, and shook his head, as if I were completely deranged.

Earlier this year, I got a demo of a similar technology from a five-year-old startup called StoryFile, which promises to take things to the next level. Its Life service records responses on video rather than just voice alone.

You can choose from hundreds of questions for the subject. Then you record the person answering the questions; this can be done on any device with a camera and a microphone, including a smartphone, though the higher-quality the recording, the better the outcome. After uploading the files, the company turns them into a digital version of the person you can see and speak to. It can only answer the questions it’s been programmed to answer, much like HereAfter, just with video.

StoryFile’s CEO, Stephen Smith, demonstrated the technology on a video call, where we were joined by his mother. She died earlier this year, but here she was on the call, sitting in a comfortable chair in her living room. For a brief time, I could only see her, shared via Smith’s screen. She was soft-spoken, with wispy hair and friendly eyes. She dispensed life advice. She seemed wise.

Smith told me that his mother “attended” her own funeral: “At the end she said, ‘I guess that’s it from me … goodbye!’ and everyone burst into tears.” He told me her digital participation was well received by family and friends. And, arguably most important of all, Smith said he’s deeply comforted by the fact that he managed to capture his mother on camera before she passed away.

The video technology itself looked relatively slick and professional, though the result still fell vaguely within the uncanny valley, especially in the facial expressions. At points, much as with my own parents, I had to remind myself that she wasn’t really there.

Both HereAfter and StoryFile aim to preserve someone’s life story rather than allowing you to have a full, new conversation with the bot each time. This is one of the major limitations of many current offerings in grief tech: they’re generic. These replicas may sound like someone you love, but they know nothing about you. Anyone can talk to them, and they’ll reply in the same tone. And the replies to a given question are the same every time you ask.

“The biggest issue with the [existing] technology is the idea you can generate a single universal person,” says Justin Harrison, founder of a soon-to-launch service called You, Only Virtual. “But the way we experience people is unique to us.”

You, Only Virtual and a few other startups want to go further, arguing that recounting memories won’t capture the fundamental essence of a relationship between two people. Harrison wants to create a personalized bot that’s for you and you alone.

The first incarnation of the service, which is set to launch in early 2023, will allow people to build a bot by uploading someone’s text messages, emails, and voice conversations. Ultimately, Harrison hopes, people will feed it data as they go; the company is currently building a communication platform that customers will be able to use to message and talk with loved ones while they’re still alive. That way, all the data will be readily available to be turned into a bot once they’re not.

That is exactly what Harrison has done with his mother, Melodi, who has stage 4 cancer: “I built it by hand using five years of my messages with her. It took 12 hours to export, and it runs to thousands of pages,” he says of his chatbot.

Harrison says the interactions he has with the bot are more meaningful to him than if it were simply regurgitating memories. Bot Melodi uses the phrases his mother uses and replies to him in the way she’d reply, calling him “honey,” using the emojis she’d use and the same quirks of spelling. He won’t be able to ask Melodi’s avatar questions about her life, but that doesn’t bother him. The point, for him, is to capture the way someone communicates. “Just recounting memories has little to do with the essence of a relationship,” he says.

Avatars that people feel a deep personal connection with can have staying power. In 2016, entrepreneur Eugenia Kuyda built what is thought to be the first bot of this kind after her friend Roman died, using her text conversations with him. (She later founded a startup called Replika, which creates virtual companions not based on real people.)

She found it a hugely helpful way to process her grief, and she still speaks to Roman’s bot today, she says, especially around his birthday and the anniversary of his passing.

But she warns that users need to be careful not to think this technology is re-creating or even preserving people. “I didn’t want to bring back his clone, but his memory,” she says. The intention was to “create a digital monument where you can interact with that person, not in order to pretend they’re alive, but to hear about them, remember how they were, and be inspired by them again.”

Some people find that hearing the voices of their loved ones after they’ve gone helps with the grieving process. It’s not uncommon for people to listen to voicemails from someone who has died, for example, says Erin Thompson, a clinical psychologist who specializes in grief. A virtual avatar that you can have more of a conversation with could be a valuable, healthy way to stay connected to someone you loved and lost, she says.

But Thompson and others echo Kuyda’s warning: it’s possible to put too much weight on the technology. A grieving person needs to remember that these bots can only ever capture a small sliver of someone. They are not sentient, and they will not replace healthy, functional human relationships.

“Your parents are not really there. You’re talking to them, but it’s not really them,” says Erica Stonestreet, an associate professor of philosophy at the College of Saint Benedict & Saint John’s University, who studies personhood and identity.

Particularly in the first weeks and months after a loved one dies, people struggle to accept the loss and may find any reminders of the person triggering. “In the acute phase of grief, you can get a strong sense of unreality, not being able to accept they’re gone,” Thompson says. There’s a risk that this sort of intense grief could intersect with, or even cause, mental illness, especially if it’s constantly being fueled and prolonged by reminders of the person who’s passed away.

Arguably, this risk might be small today given these technologies’ flaws. Even though sometimes I fell for the illusion, it was clear my parent bots were not in fact the real deal. But the risk that people might fall too deeply for the phantom of personhood will surely grow as the technology improves.

And there are still other risks. Any service that allows you to create a digital replica of someone without their participation raises some complex ethical issues regarding consent and privacy. While some might argue that permission is less important with someone no longer alive, can’t you also argue that the person who generated the other side of the conversation should have a say too?

And what if that person is not, in fact, dead? There’s little to stop people from using grief tech to create virtual versions of living people without their consent: for example, an ex. Companies that sell services powered by past messages are aware of this possibility and say they will delete a person’s data if that person requests it. But companies are not obliged to do any checks to make sure their technology is being limited to people who have consented or died. There’s no law to stop anyone from creating avatars of other people, and good luck explaining it to your local police department. Imagine how you’d feel if you learned there was a virtual version of you out there, somewhere, under somebody else’s control.

If digital replicas become mainstream, there will inevitably need to be new processes and norms around the legacies we leave behind online. And if we’ve learned anything from the history of technological development, we’ll be better off if we grapple with the possibility of these replicas’ misuse before, not after, they reach mass adoption.

Will that ever happen, though? You, Only Virtual uses the tagline “Never Have to Say Goodbye,” but it’s not actually clear how many people want or are ready for a world like that. Grieving for those who’ve passed away is, for most people, one of the few aspects of life still largely untouched by modern technology.

On a more mundane level, the costs could be a drawback. Although some of these services have free versions, they can easily run into the hundreds if not thousands of dollars.

HereAfter’s top-tier unlimited version lets you record as many conversations with the subject as you like, and it costs $8.99 a month. That may sound cheaper than StoryFile’s one-off $499 payment to access its premium, unlimited package of services. However, at $108 per year, HereAfter services could quickly add up if you do some ghoulish back-of-the-envelope math on lifetime costs. It’s a similar situation with You, Only Virtual, which is slated to cost somewhere between $9.99 and $19.99 a month when it launches.
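For anyone who wants to run that back-of-the-envelope sum, here is a minimal sketch. It uses only the two prices quoted above. The function names are hypothetical, and the flat-price assumption (no discounts, price changes, or inflation) is mine rather than anything either company has stated.

```python
import math

# Prices quoted in the article; everything else is an illustrative assumption.
HEREAFTER_MONTHLY = 8.99     # HereAfter top tier, dollars per month
STORYFILE_ONE_OFF = 499.00   # StoryFile premium package, one-off payment


def subscription_cost(monthly_price: float, months: int) -> float:
    """Total paid after `months` of a flat monthly subscription (assumed constant)."""
    return round(monthly_price * months, 2)


def break_even_months(monthly_price: float, one_off_price: float) -> int:
    """First month in which the running subscription total reaches the one-off price."""
    return math.ceil(one_off_price / monthly_price)


yearly = subscription_cost(HEREAFTER_MONTHLY, 12)        # one year of HereAfter
decade = subscription_cost(HEREAFTER_MONTHLY, 120)       # ten years of HereAfter
months = break_even_months(HEREAFTER_MONTHLY, STORYFILE_ONE_OFF)

print(f"One year: ${yearly}, ten years: ${decade}, break-even after {months} months")
```

Under those assumptions a year of the subscription comes to just under $108, and its running total passes the $499 one-off price during the fifth year.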

Creating an avatar or chatbot of someone also requires time and effort, not least of which is just building up the energy and motivation to get started. This is true both for the user and for the subject, who may be nearing death and whose active participation may be required.

Fundamentally, people don’t like grappling with the fact they are going to die, says Marius Ursache, who launched a company called Eternime in 2014. Its idea was to create a sort of Tamagotchi that people could train while they were alive to preserve a digital version of themselves. It received a huge surge of interest from people around the world, but few went on to adopt it. The company shuttered in 2018 after failing to pick up enough users.

“It’s something you can put off until next week, next month, next year,” he says. “People assume that AI is the key to breaking this. But really, it’s human behavior.”

Kuyda agrees: “People are extremely scared of death. They don’t want to talk about it or touch it. When you take a stick and start poking, it freaks them out. They’d rather pretend it doesn’t exist.”

Ursache tried a low-tech approach on his own parents, giving them a notebook and pens on his birthday and asking them to write down their memories and life stories. His mother wrote two pages, but his father said he’d been too busy. In the end, he asked if he could record some conversations with them, but they never managed to get around to it.

“My dad passed away last year, and I never did those recordings, and now I feel like an idiot,” he says.

Personally, I have mixed feelings about my experiment. I’m glad to have these virtual, audio versions of my mum and dad, even if they’re imperfect. They’ve enabled me to learn new things about my parents, and it’s comforting to think that those bots will be there even when they aren’t. I’m already thinking about who else I might want to capture digitally: my husband (who will probably roll his eyes again), my sister, maybe even my friends.

On the other hand, like a lot of people, I don’t want to think about what will happen when the people I love die. It’s uncomfortable, and many people reflexively flinch when I mention my morbid project. And I can’t help but find it sad that it took a stranger Zoom-interviewing my parents from another continent for me to properly appreciate the multifaceted, complex people they are. But I feel lucky to have had the chance to grasp that, and to still have the precious opportunity to spend more time with them, and learn more about them, face to face, no technology involved.