When Greg unboxed a new Roomba robot vacuum cleaner in December 2019, he thought he knew what he was getting into.

He would let the preproduction test version of iRobot’s Roomba J series device roam around his house, let it collect all sorts of data to help improve its artificial intelligence, and provide feedback to iRobot about his user experience.

He had done this all before. Outside of his day job as an engineer at a software company, Greg had been beta-testing products for the past decade. He estimates that he’s tested over 50 products in that time, everything from sneakers to smart home cameras.

“I really enjoy it,” he says. “The whole idea is that you get to learn about something new, and hopefully be involved in shaping the product, whether it’s making a better-quality release or actually defining features and functionality.”

But what Greg didn’t know, and does not believe he consented to, was that iRobot would share test users’ data in a sprawling, global data supply chain, where everything (and every person) captured by the devices’ front-facing cameras could be seen, and perhaps annotated, by low-paid contractors outside the United States who could screenshot and share images at their will.

Greg, who asked that we identify him only by his first name because he signed a nondisclosure agreement with iRobot, is not the only test user who feels dismayed and betrayed.

Nearly a dozen people who participated in iRobot’s data collection efforts between 2019 and 2022 have come forward in the weeks since MIT Technology Review published an investigation into how the company uses images captured from inside real homes to train its artificial intelligence. The participants have shared similar concerns about how iRobot handled their data, and whether those practices conform with the company's own data protection promises. After all, the agreements go both ways, and whether or not the company legally violated its promises, the participants feel misled.

“There is a real concern about whether the company is being deceptive if people are signing up for this kind of highly invasive type of surveillance and never fully understand … what they’re agreeing to,” says Albert Fox Cahn, the executive director of the Surveillance Technology Oversight Project.

The company’s failure to adequately protect test user data feels like “a clear breach of the agreement on their side,” Greg says. It’s “a failure … [and] also a violation of trust.”

Now, he wonders, “where is the accountability?”

The blurry line between testers and consumers

Last month MIT Technology Review revealed how iRobot collects photos and videos from the homes of test users and employees and shares them with data annotation companies, including San Francisco–based Scale AI, which hire far-flung contractors to label the data that trains the company’s artificial-intelligence algorithms.

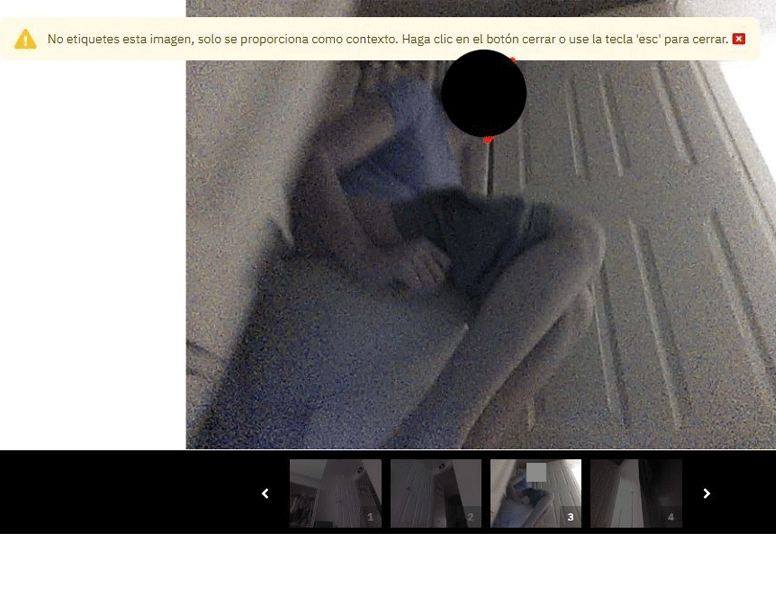

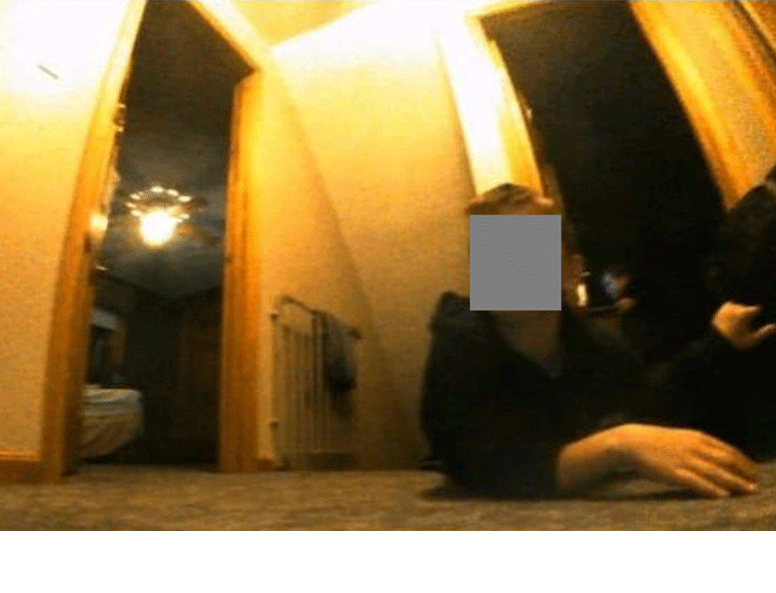

We found that in one 2020 project, gig workers in Venezuela were asked to label objects in a series of images of home interiors, some of which included individuals, with their faces visible to the data annotators. These workers then shared at least 15 images, including shots of a minor and of a woman sitting on the toilet, to social media groups where they gathered to talk shop. We know about these particular images because the screenshots were subsequently shared with us, but our interviews with data annotators and researchers who study data annotation suggest they are unlikely to be the only ones that made their way online; it’s not uncommon for sensitive images, videos, and audio to be shared with labelers.

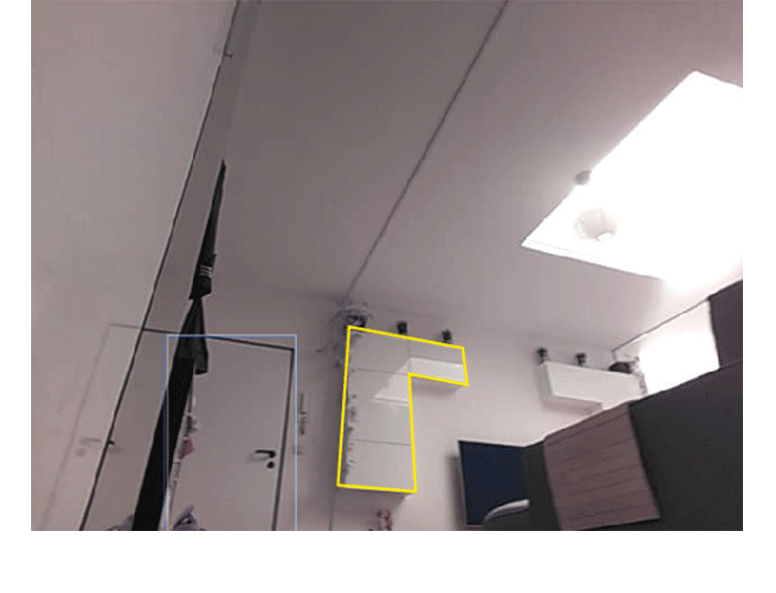

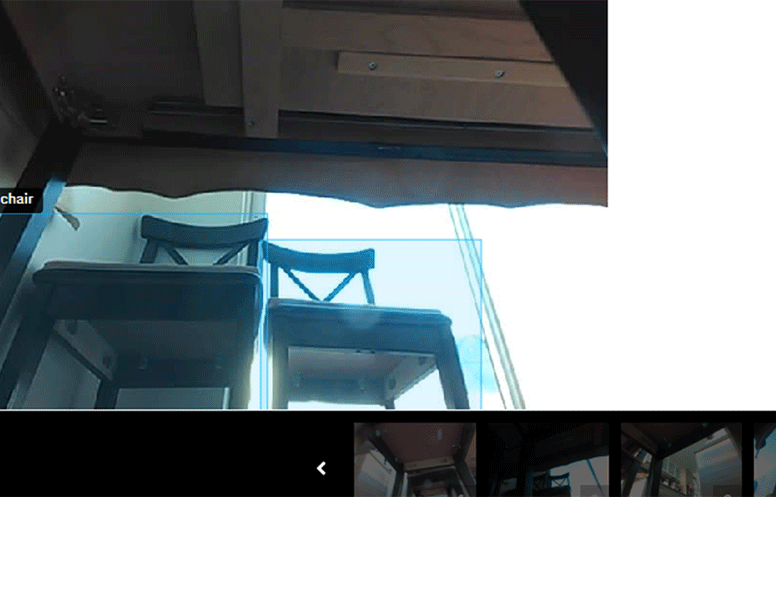

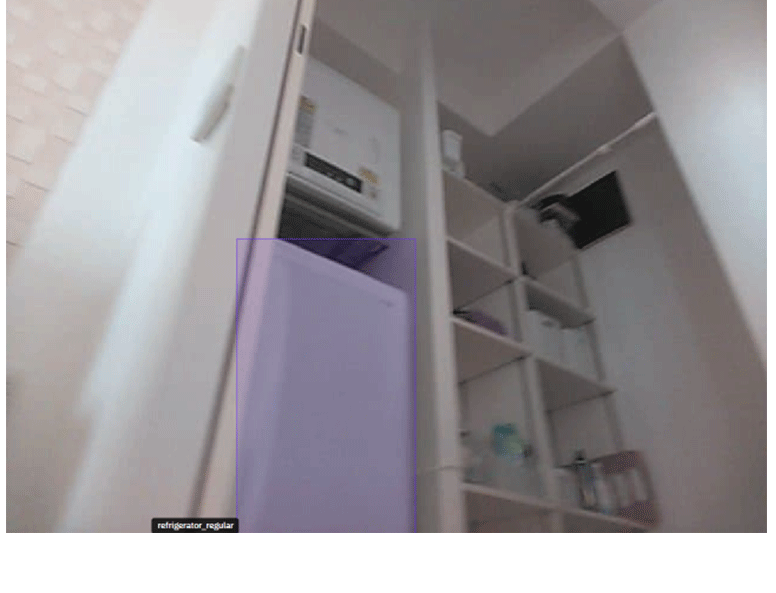

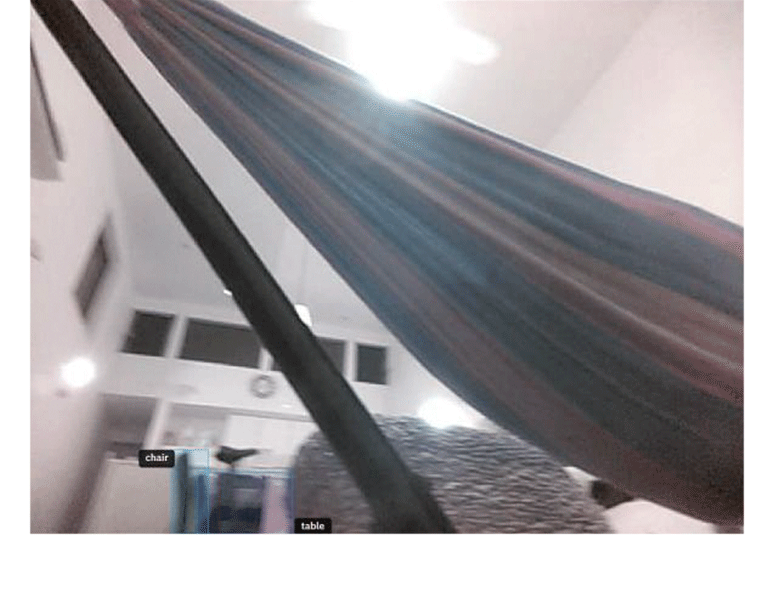

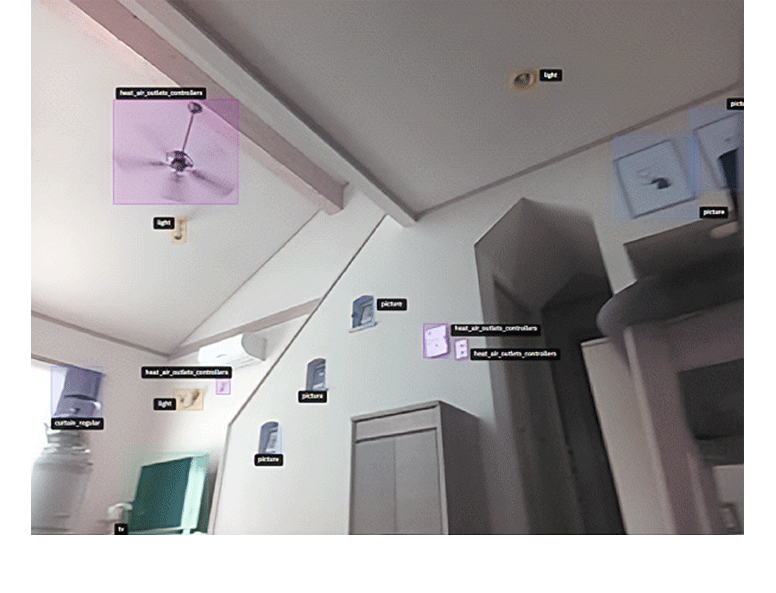

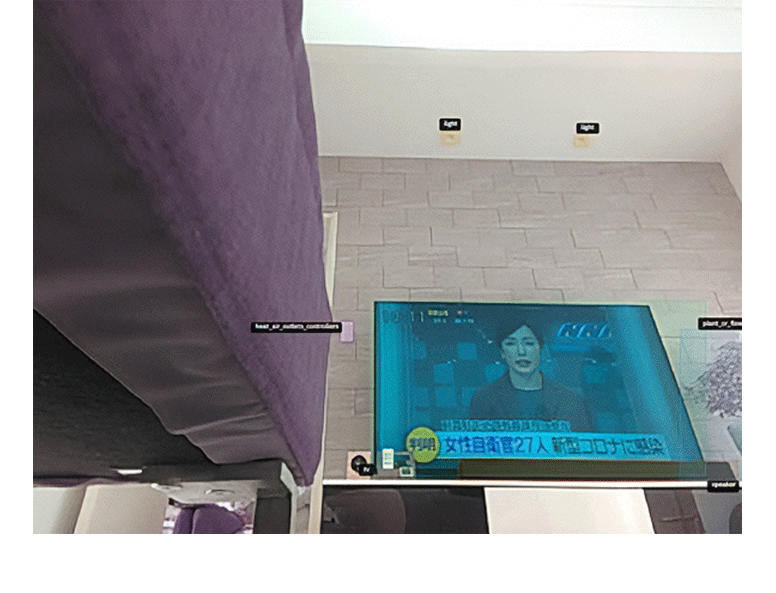

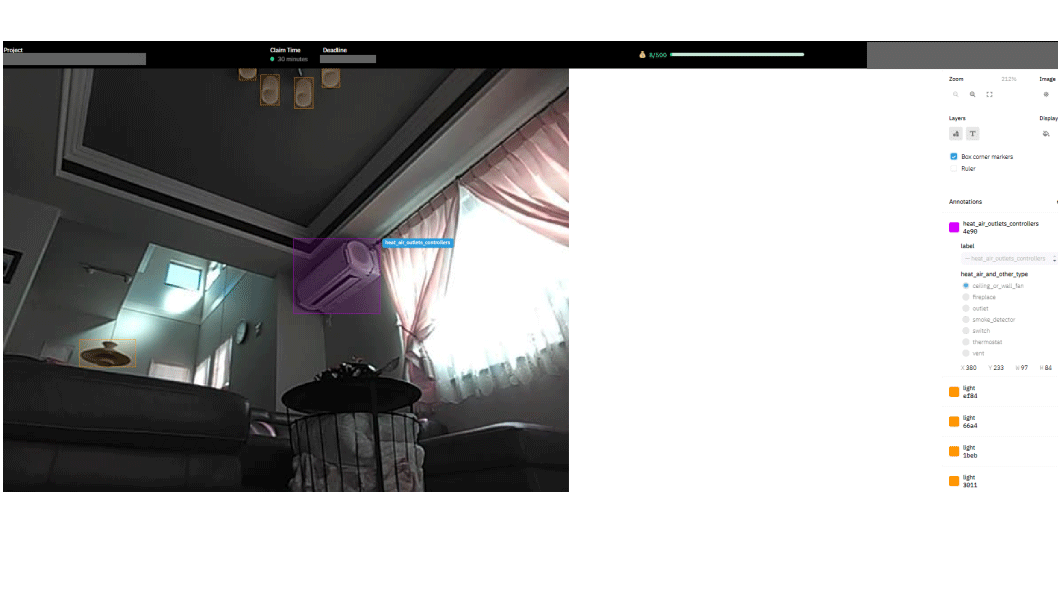

Image captured by iRobot development devices, being annotated by data labelers. Faces, where visible, have been obscured with a gray box by MIT Technology Review.

Image captured by iRobot development devices, being annotated by data labelers. The child's face was originally visible, but has been obscured by MIT Technology Review.

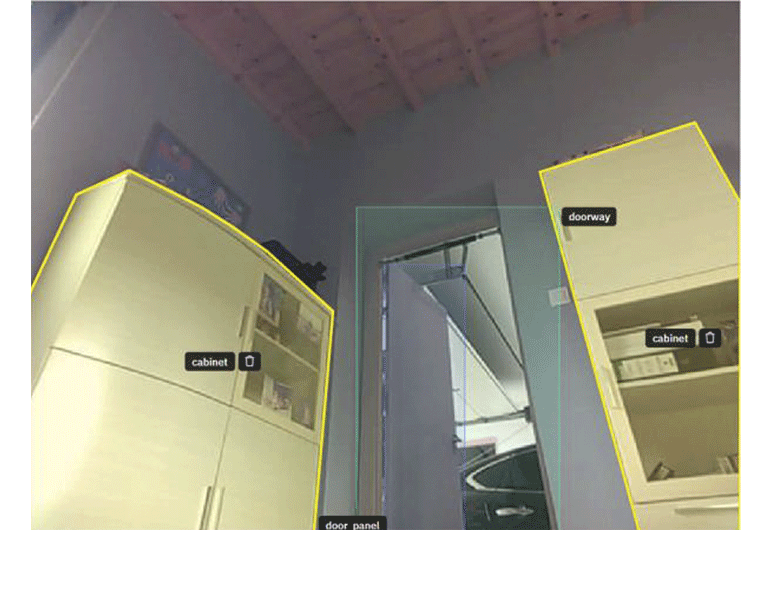

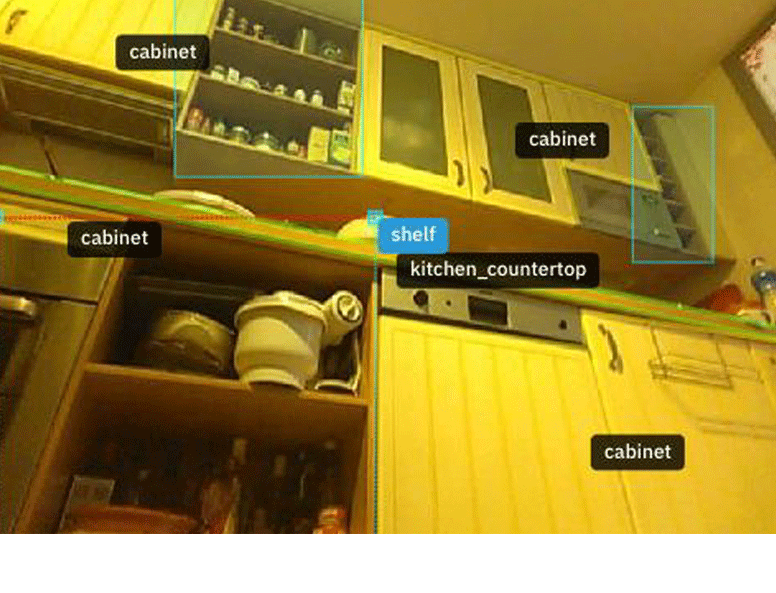

Image captured by iRobot development devices, being annotated by data labelers.

Image captured by iRobot development devices, being annotated by data labelers.

Image captured by iRobot development devices, being annotated by data labelers.

Image captured by iRobot development devices, being annotated by data labelers.

Image captured by iRobot development devices, being annotated by data labelers.

Image captured by iRobot development devices, being annotated by data labelers.

Image captured by iRobot development devices, being annotated by data labelers.

Image captured by iRobot development devices, being annotated by data labelers.

Image captured by iRobot development devices, being annotated by data labelers.

Image captured by iRobot development devices, being annotated by data labelers. The woman's face was originally visible, but was obscured by MIT Technology Review. The Roomba J7's front light is reflected on the oven.

Image captured by iRobot development devices, being annotated by data labelers.


Shortly after MIT Technology Review contacted iRobot for comment on the photos last fall, the company terminated its contract with Scale AI.

Nevertheless, in a LinkedIn post in response to our story, iRobot CEO Colin Angle said the mere fact that these images, and the faces of test users, were visible to human gig workers was not a reason for concern. Rather, he wrote, making such images available was actually necessary to train iRobot’s object recognition algorithms: “How do our robots get so smart? It starts during the development process, and as part of that, through the collection of data to train machine learning algorithms.” Besides, he pointed out, the images came not from customers but from “paid data collectors and employees” who had signed consent agreements.

In the LinkedIn post and in statements to MIT Technology Review, Angle and iRobot have repeatedly emphasized that no customer data was shared and that “participants are informed and acknowledge how the data will be collected.”

This attempt to clearly delineate between customers and beta testers, and how those people’s data will be treated, has been confounding to many testers, who say they consider themselves part of iRobot’s broader community and feel that the company’s comments are dismissive. Greg and the other testers who reached out also strongly dispute any implication that by volunteering to test a product, they have signed away all their privacy.

What’s more, the line between tester and consumer is not so clear cut. At least one of the testers we spoke with enjoyed his test Roomba so much that he later purchased the device.

This is not an anomaly; rather, converting beta testers to customers and evangelists for the product is something Centercode, the company that recruited the participants on behalf of iRobot, actively tries to promote: “It’s hard to find better potential brand ambassadors than in your beta tester community. They’re a great pool of free, authentic voices that can talk about your launched product to the world, and their (likely techie) friends,” it wrote in a marketing blog post.

To Greg, iRobot has “failed spectacularly” in its treatment of the testing community, particularly in its silence over the privacy breach. iRobot says it has notified individuals whose photos appeared in the set of 15 images, but it did not respond to a question about whether it would notify other individuals who had taken part in its data collection. The participants who reached out to us said they have not received any kind of notice from the company.

“If your credit card information … was stolen at Target, Target doesn’t notify the one person who has the breach,” he adds. “They send out a notification that there was a breach, this is what happened, [and] this is how they’re handling it.”

Inside the world of beta testing

The journey of iRobot’s AI-powering data points starts on testing platforms like Betabound, which is run by Centercode. The technology company, based in Laguna Hills, California, recruits volunteers to test out products and services for its clients, primarily consumer tech companies. (iRobot spokesperson James Baussmann confirmed that the company has used Betabound but said that “not all of the paid data collectors were recruited via Betabound.” Centercode did not respond to multiple requests for comment.)


As early adopters, beta testers are often more tech savvy than the average consumer. They are enthusiastic about gadgets and, like Greg, sometimes work in the technology sector themselves, so they are often well aware of the standards around data protection.

A review of all 6,200 test opportunities listed on Betabound’s website as of late December shows that iRobot has been testing on the platform since at least 2017. The latest project, which is specifically recruiting German testers, started just last month.

iRobot’s vacuums are far from the only devices in their category. There are over 300 tests listed for other “smart” devices powered by AI, including “a smart microwave with Alexa support,” as well as multiple other robot vacuums.

The first step for potential testers is to fill out a profile on the Betabound website. They can then apply for specific opportunities as they’re announced. If accepted by the company running the test, testers sign numerous agreements before they are sent the devices.

Betabound testers are not paid, as the platform’s FAQ for testers notes: “Companies cannot expect your feedback to be honest and reliable if you’re being paid to give it.” Rather, testers might receive gift cards, a chance to keep their test devices free of charge, or complimentary production versions delivered after the device they tested goes to market.

iRobot, however, did not allow testers to keep their devices, nor did they receive final products. Instead, the beta testers told us that they received gift cards in amounts ranging from $30 to $120 for running the robot vacuums multiple times a week over multiple weeks. (Baussmann says that “with respect to the amount paid to participants, it varies depending upon the work involved.”)

For some testers, this compensation was disappointing, “even before considering … my bare ass could now be on the Internet,” as B, a tester we’re identifying only by his first initial, wrote in an email. He called iRobot “cheap bastards” for the $30 gift card that he received for his data, collected daily over three months.

What users are really agreeing to

When MIT Technology Review reached out to iRobot for comment on the set of 15 images last fall, the company emphasized that each image had a corresponding consent agreement. It would not, however, share the agreements with us, citing “legal reasons.” Instead, the company said the agreement required an “acknowledgment that video and images are being captured during cleaning jobs” and that “the agreement encourages paid data collectors to remove anything they deem sensitive from any space the robot operates in, including children.”

Test users have since shared with MIT Technology Review copies of their agreements with iRobot. These include several different forms: a general Betabound agreement and a “global test agreement for development robots,” as well as agreements on nondisclosure, test participation, and product loan. There are also agreements for some of the specific tests being run.

The text of iRobot's global test agreement from 2019, copied into a new document to protect the identity of test users.

The forms do contain the language iRobot previously laid out, while also spelling out the company’s own commitments on data protection toward test users. But they provide little clarity on what exactly that means, especially how the company will handle user data after it’s collected and whom the data will be shared with.

The “global test agreement for development robots,” similar versions of which were independently shared by a half-dozen individuals who signed them between 2019 and 2022, contains the bulk of the information on privacy and consent.

In the short document of roughly 1,300 words, iRobot notes that it is the controller of information, which comes with legal responsibilities under the EU’s GDPR to ensure that data is collected for legitimate purposes and securely stored and processed. Additionally, it states, “iRobot agrees that third-party vendors and service providers selected to process [personal information] will be vetted for privacy and data security, will be bound by strict confidentiality, and will be governed by the terms of a Data Processing Agreement,” and that users “may be entitled to additional rights under applicable privacy laws where [they] reside.”

It’s this section of the agreement that Greg believes iRobot breached. “Where in that language is the accountability that iRobot is proposing to the testers?” he asks. “I completely disagree with how offhandedly this is being responded to.”


What’s more, all test participants had to agree that their data could be used for machine learning and object detection training. Specifically, the global test agreement’s section on “use of research information” required an acknowledgment that “text, video, images, or audio … may be used by iRobot to analyze statistics and usage data, diagnose technology problems, enhance product performance, product and feature innovation, market research, trade presentations, and internal training, including machine learning and object detection.”

What isn’t spelled out here is that iRobot carries out the machine-learning training through human data labelers who teach the algorithms, click by click, to recognize the individual elements captured in the raw data. In other words, the agreements shared with us never explicitly mention that personal images will be seen and analyzed by other humans.

Baussmann, iRobot’s spokesperson, said that the language we highlighted “covers a variety of testing scenarios” and is not specific to images sent for data annotation. “For example, sometimes testers are asked to take photos or videos of a robot’s behavior, such as when it gets stuck on a certain object or won’t completely dock itself, and send those photos or videos to iRobot,” he wrote, adding that “for tests in which images will be captured for annotation purposes, there are specific terms that are outlined in the agreement pertaining to that test.”

He also wrote that “we cannot be sure the people you have spoken with were part of the development work that related to your article,” though he notably did not dispute the veracity of the global test agreement, which ultimately allows all test users’ data to be collected and used for machine learning.

What users really understand

When we asked privacy lawyers and scholars to review the consent agreements and shared with them the test users’ concerns, they saw the documents and the privacy violations that ensued as emblematic of a broken consent framework that affects us all, whether we are beta testers or regular consumers.

Experts say companies are well aware that people rarely read privacy policies closely, if we read them at all. But what iRobot’s global test agreement attests to, says Ben Winters, a lawyer with the Electronic Privacy Information Center who focuses on AI and human rights, is that “even if you do read it, you still don’t get clarity.”

Rather, “a lot of this language seems to be designed to exempt the company from applicable privacy laws, but none of it reflects the reality of how the product operates,” says Cahn, pointing to the robot vacuums’ mobility and the impossibility of controlling where potentially sensitive people or objects (in particular children) are at all times in their own home.

Ultimately, that “place[s] much of the responsibility … on the end user,” notes Jessica Vitak, an information scientist at the University of Maryland’s College of Information Studies who studies best practices in research and consent policies. Yet it doesn’t give them a true accounting of “how things might go wrong,” she says, “which would be very valuable information when deciding whether to participate.”

Not only does it put the onus on the user; it also leaves it to that single person to “unilaterally affirm the consent of every person within the home,” explains Cahn, even though “everyone who lives in a house that uses one of these devices will potentially be put at risk.”

All of this lets the company shirk its real responsibility as a data controller, adds Deirdre Mulligan, a professor in the School of Information at UC Berkeley. “A device manufacturer that is a data controller” can’t simply “offload all responsibility for the privacy implications of the device's presence in the home to an employee” or other unpaid data collectors.

Some participants did acknowledge that they hadn’t read the consent agreement closely. “I skimmed the [terms and conditions] but didn't notice the part about sharing *video and images* with a third party. That would’ve given me pause,” one tester, who used the vacuum for three months last year, wrote in an email.

Before testing his Roomba, B said, he had “perused” the consent agreement and “figured it was a standard boilerplate: ‘We can do whatever the hell we want with what we collect, and if you don’t like that, don't participate [or] use our product.’” He added, “Admittedly, I just wanted a free product.”

Still, B expected that iRobot would offer some level of data protection, not that the “company that made us swear up and down with NDAs that we wouldn't share any information” about the tests would “basically subcontract their most intimate work to the lowest bidder.”

Notably, many of the test users who reached out (even those who say they did read the full global test agreement, as well as myriad other agreements, including ones applicable to all consumers) still say they lacked a clear understanding of what collecting their data actually meant or how exactly that data would be processed and used.

What they did understand often depended more on their own awareness of how artificial intelligence is trained than on anything communicated by iRobot.

One tester, Igor, who asked to be identified only by his first name, works in IT for a bank; he considers himself to have “above average training in cybersecurity” and has built his own internet infrastructure at home, allowing him to self-host sensitive information on his own servers and monitor network traffic. He said he did understand that videos would be taken from inside his home and that they would be tagged. “I felt that the company handled the disclosure of the data collection responsibly,” he wrote in an email, pointing to both the consent agreement and the device’s prominently placed sticker reading “video recording in process.” But, he emphasized, “I’m not an average internet user.”


Photo of iRobot’s preproduction Roomba J series device. COURTESY OF IROBOT

For many testers, the biggest shock from our story was how the data would be handled after collection, including just how much humans would be involved. “I assumed it [the video recording] was only for internal validation if there was an issue as is common practice (I thought),” another tester who asked to be anonymous wrote in an email. And as B put it, “It definitely crossed my mind that these photos would most likely be viewed for tagging within a company, but the idea that they were leaked online is disconcerting.”

“Human review didn't surprise me,” Greg adds, but “the level of human review did … the idea, generally, is that AI should be able to improve the system 80% of the way … and the rest of it, I think, is just on the exception … that [humans] have to look at it.”

Even the participants who were comfortable with having their images viewed and annotated, like Igor, said they were uncomfortable with how iRobot processed the data after the fact. The consent agreement, Igor wrote, “doesn’t excuse the poor data handling” and “the general storage and control that allowed a contractor to export the data.”

Multiple US-based participants, meanwhile, expressed concerns about their data being transferred out of the country. The global agreement, they noted, had language for participants “based outside of the US” saying that “iRobot may process Research Data on servers not in my home country … including those whose laws may not offer the same level of data protection as my home country,” but the agreement did not have any corresponding information for US-based participants on how their data would be processed.

“I had no idea that the data was going overseas,” one US-based participant wrote to MIT Technology Review, a sentiment repeated by many.

Once data is collected, whether from test users or from customers, people ultimately have little to no control over what the company does with it next, including, for US users, sharing their data overseas.

US users, in fact, have few privacy protections even in their home country, notes Cahn, which is why the EU has laws to protect data from being transferred outside the EU, and to the US specifically. “Member states have to take such extensive steps to protect data being stored in that country. Whereas in the US, it’s largely the Wild West,” he says. “Americans have no equivalent protection against their data being stored in other countries.”


Many testers themselves are aware of the broader issues around data protection in the US, which is why they chose to speak out.

“Outside of regulated industries like banking and health care, the best thing we can probably do is create significant liability for data protection failure, as only hard economic incentives will make companies focus on this,” wrote Igor, the tester who works in IT at a bank. “Sadly the political climate doesn’t seem like anything could pass now in the US. The best we have is the public shaming … but that is often only reactionary and catches just a small percentage of what’s out there.”

In the meantime, in the absence of change and accountability (whether from iRobot itself or pushed by regulators), Greg has a message for potential Roomba buyers. “I just wouldn’t buy one, flat out,” he says, because he feels “iRobot is not handling their data security model well.”

And on top of that, he warns, they’re “really dismissing their responsibility as vendors to … notify [or] protect customers, which in this case include the testers of these products.”

Lam Thuy Vo contributed research.